Generate Text to Video, Image to Video, and Text to Image with Wan 2.5

Why WAN 2.5 is Worth Using

Alibaba WAN 2.5 is a state-of-the-art text/image-to-video generation model available on Alibaba Cloud's DashScope platform. This powerful model produces high-quality videos in 480p, 720p, or 1080p resolution, complete with synchronized audio, from simple text or image prompts.

🔊 Native Audio + Video Sync

WAN 2.5 doesn’t just generate visuals — it also produces voices, ambience, and music perfectly in sync with lip movements and scene timing. No more manually aligning soundtracks or lip-sync!

🎛 Multimodal Control (Text, Image, Audio)

You can guide it with plain text, feed in a reference image, and even add an audio track (like a voice or music clip). This gives you much finer control over the style and feel of your final video.

🎥 High Quality Output

Supports 480p, 720p, and 1080p at 24fps. For short clips, the 1080p + synced audio combo already looks polished enough for marketing, social media, or demo reels.

💸 Cost-Effective Compared to Rivals

It’s been described as more lightweight and affordable than other high-end video models (like Veo 3), while still delivering synchronized audio + visuals.

🌍 Better Multilingual Support

WAN 2.5 performs especially well when prompts are written in Chinese or other non-English languages — with smoother lip-sync and stronger audio-visual alignment.

🌀 Stable Motion & Coherent Scenes

Camera pans, transitions, and moving subjects look much smoother. Scenes hold together without the strange flickering or “glitches” that older models sometimes produced.

To use Wan 2.5 in Promptus

1. Make sure Promptus is updated

Promptus bundles ComfyUI workflows, so just update Promptus to the latest version. The new WAN 2.5 API nodes will appear in the node search.

2. Get your API Key

Log in to Alibaba DashScope / Model Studio and copy your personal API Key. You’ll need this to run WAN 2.5.

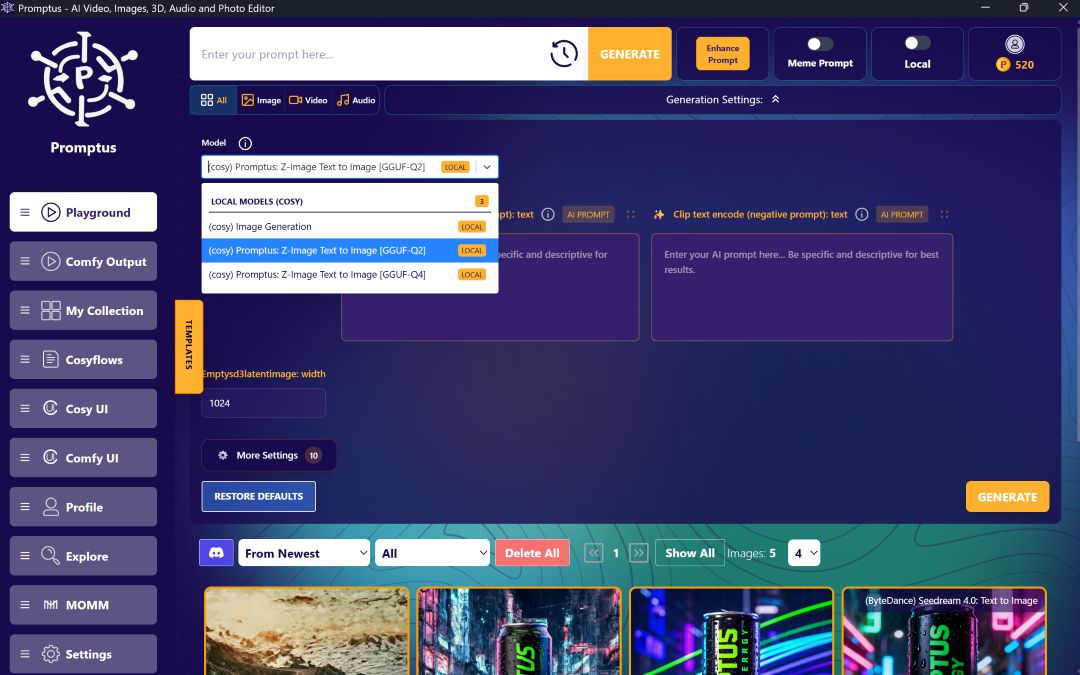
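If you also script against DashScope outside Promptus, one common habit is to keep the key in an environment variable instead of pasting it into files you might share. Here is a minimal Python sketch of that idea. The variable name `DASHSCOPE_API_KEY` is an assumption (use whatever name your setup expects), and `load_api_key` and `mask` are hypothetical helpers written for this example, not part of Promptus or DashScope.

```python
import os


def load_api_key(env=None, name="DASHSCOPE_API_KEY"):
    """Return the API key stored under `name`, or raise if it is missing."""
    # Assumption: the key lives in an environment variable with this name.
    env = os.environ if env is None else env
    key = env.get(name, "").strip()
    if not key:
        raise RuntimeError(f"Set {name} before running")
    return key


def mask(key):
    """Replace everything except the last four characters with asterisks."""
    return "*" * max(len(key) - 4, 0) + key[-4:]


if __name__ == "__main__":
    try:
        print("Using key:", mask(load_api_key()))
    except RuntimeError as err:
        print(err)
```

Printing only the masked form makes it easier to confirm which key is active without exposing it in screenshots or logs.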

3. Load the starter workflow

- Open Promptus.

- Click Load Workflow and import one of the JSON files we shared:

  - Text-to-Video

  - Image-to-Video

  - Text-to-Image

- The workflow will open in Promptus’s built-in ComfyUI editor.
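Before importing a shared JSON file, it can help to peek at which nodes it contains. The sketch below assumes the file is a UI-style ComfyUI export with a top-level "nodes" list whose entries carry a "type" field. The node names in the sample (`WanTextToVideoApi`, `SaveVideo`) are made up for illustration, and the real WAN 2.5 node names in Promptus may differ.

```python
import json


def list_node_types(workflow_text):
    """Return the sorted, de-duplicated node types found in a workflow export."""
    data = json.loads(workflow_text)
    # Assumption: UI-style exports store nodes in a top-level "nodes" list.
    if not isinstance(data, dict):
        return []
    nodes = data.get("nodes", [])
    return sorted(
        {node["type"] for node in nodes if isinstance(node, dict) and "type" in node}
    )


# Hypothetical sample; a real export holds many more fields per node.
sample = '{"nodes": [{"id": 1, "type": "WanTextToVideoApi"}, {"id": 2, "type": "SaveVideo"}]}'

if __name__ == "__main__":
    print(list_node_types(sample))
```

To check a real file, read it with `open(path, encoding="utf-8").read()` and pass the text to `list_node_types`.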

4. Configure the WAN node

Inside the WAN node, set:

- API Key → paste your DashScope key.

- Model → wan2.5-t2v-preview (text→video), wan2.5-i2v-preview (image→video), or wan2.5-t2i-preview (text→image).

- Duration → 5s or 10s.

- Resolution → up to 1080p, 24fps.

- Audio → choose auto-generated, none, or reference audio.

- Prompt → enter your scene description in English or Chinese.

Wan 2.5 can generate videos that are up to 10 seconds long. See here to view more examples of Wan 2.5 video capabilities.
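The settings above can be written down as a small checklist in code. This is only a sketch for sanity-checking values before you type them into the node, not how Promptus or DashScope validates them. The model names, durations, and resolutions come from this guide. The audio labels (`auto`, `none`, `reference`) and the `WanSettings` and `validate` names are assumptions made for the example.

```python
from dataclasses import dataclass

# Values taken from this guide.
MODELS = {
    "wan2.5-t2v-preview": "text-to-video",
    "wan2.5-i2v-preview": "image-to-video",
    "wan2.5-t2i-preview": "text-to-image",
}
DURATIONS_S = (5, 10)
RESOLUTIONS = ("480p", "720p", "1080p")
# Assumption: short labels standing in for the node's audio choices.
AUDIO_MODES = ("auto", "none", "reference")


@dataclass
class WanSettings:
    model: str
    prompt: str
    duration_s: int = 5
    resolution: str = "1080p"
    audio: str = "auto"


def validate(settings):
    """Return a list of human-readable problems; an empty list means OK."""
    problems = []
    if settings.model not in MODELS:
        problems.append(f"unknown model: {settings.model}")
    if not settings.prompt.strip():
        problems.append("prompt is empty")
    # Duration, resolution, and audio are only checked for non-image models.
    if MODELS.get(settings.model) != "text-to-image":
        if settings.duration_s not in DURATIONS_S:
            problems.append("duration must be 5 or 10 seconds")
        if settings.resolution not in RESOLUTIONS:
            problems.append("resolution must be 480p, 720p, or 1080p")
        if settings.audio not in AUDIO_MODES:
            problems.append(f"unknown audio mode: {settings.audio}")
    return problems


if __name__ == "__main__":
    draft = WanSettings("wan2.5-t2v-preview", "A red kite over a beach", duration_s=7)
    print(validate(draft))
```

Returning a list of problems rather than raising on the first one lets you see every setting that needs fixing in a single pass.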

5. Run it inside Promptus

- Click Run Workflow.

- Your video/image renders directly in Promptus.

- Outputs are saved in the Promptus ComfyUI output folder, where you can preview them or download the files.

6. Share with your friends

- You can save your prompts as presets in Promptus.

- Friends can open the same workflow, paste their API key, and run without extra setup.
