Wan 2.1 vs Hunyuan Video: Complete Setup Guide for ComfyUI in 2025

This comparison covers Wan 2.1 and Hunyuan Video, two powerful AI video generation models positioned as alternatives to Sora. It shows how to install both in ComfyUI for text-to-video and image-to-video generation on local or cloud GPUs.

Hunyuan Video Installation and Setup 📥

Hunyuan Video supports text-to-video and image-to-video generation. Follow these steps:

- Download Required Files
  - Q8_0 quantized models for both text-to-video and image-to-video (nearly original quality at a smaller size).
  - If VRAM is limited, choose a smaller quantized variant.
- Optional Downloads
  - The llava_llama3_fp8_scaled text encoder for extra VRAM savings.
  - The original full-size model files for maximum quality (only if you have enough VRAM).
- Place Files
  - Put the downloaded files into the matching ComfyUI model folders.
  - The workflow loads the model with a GGUF UNet loader. If you prefer the standard diffusion model loader, select the loader nodes in ComfyUI and press CTRL+B to toggle which one is bypassed.
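Before loading the workflow, a quick check that the files sit in the right folders can save a failed run. The sketch below is a minimal illustration. It assumes the usual ComfyUI layout: GGUF models in `models/unet`, text encoders in `models/text_encoders` (older installs use `models/clip`), and CLIP Vision models in `models/clip_vision`. The GGUF file name is a hypothetical placeholder, so match every entry to the files you actually downloaded.

```python
from pathlib import Path

# Hypothetical expected files per ComfyUI model subfolder.
# Folder names follow common ComfyUI conventions; file names are examples only.
EXPECTED_FILES = {
    "unet": ["hunyuan-video-t2v-Q8_0.gguf"],  # placeholder name for the GGUF model
    "text_encoders": ["llava_llama3_fp8_scaled.safetensors"],
    "clip_vision": ["llava_llama3_vision.safetensors"],  # needed for image-to-video
}


def missing_model_files(comfy_root, expected=EXPECTED_FILES):
    """Return 'folder/file' entries that are not present under <comfy_root>/models."""
    models_dir = Path(comfy_root) / "models"
    missing = []
    for folder, names in expected.items():
        for name in names:
            if not (models_dir / folder / name).is_file():
                missing.append(f"{folder}/{name}")
    return missing


if __name__ == "__main__":
    # Assumes the ComfyUI checkout is a folder named "ComfyUI" in the current directory.
    for path in missing_model_files("ComfyUI"):
        print("missing:", path)
```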

Hunyuan Video Configuration Settings 🎛️

- Testing (Lower Quality, Faster)
  - Steps: 20
  - Frame rate: 24 FPS
  - Length: 49 frames (≈2 seconds)
  - Resolution: below 480p
- High-Quality Generation
  - Steps: 30
  - Frame rate: 24 FPS
  - Length: 121 frames (≈5 seconds)
  - Resolution: 480p or 720p (use a cloud GPU for the higher resolution)
- Image-to-Video Workflow
  - Add the image-input nodes.
  - Use the llava_llama3_vision CLIP Vision model for image guidance.
  - Download the example images as needed.
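The "≈2 seconds" and "≈5 seconds" figures are just frames divided by frame rate. The sketch below encodes the two Hunyuan presets above and checks that arithmetic. It also checks that each frame count has the form 4n + 1 (49 and 121 both do). That check is an assumption based on the length step of ComfyUI's video latent node, so verify it against your ComfyUI version.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VideoPreset:
    steps: int
    fps: int
    frames: int

    def duration_s(self) -> float:
        """Clip length in seconds: frame count divided by frame rate."""
        return self.frames / self.fps

    def frame_count_ok(self) -> bool:
        """Assumption: the video latent expects frame counts of the form 4n + 1."""
        return self.frames >= 1 and (self.frames - 1) % 4 == 0


# Values taken from the Hunyuan Video settings listed above.
HUNYUAN_PRESETS = {
    "testing": VideoPreset(steps=20, fps=24, frames=49),
    "high_quality": VideoPreset(steps=30, fps=24, frames=121),
}

for name, preset in HUNYUAN_PRESETS.items():
    print(f"{name}: {preset.duration_s():.2f} s, 4n+1 frames: {preset.frame_count_ok()}")
```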

Wan 2.1 Video Installation and Setup 📥

Wan 2.1 offers excellent video generation with a simpler workflow. Steps:

- Download Required Files
  - Q8_0 quantized models for text-to-video and image-to-video.
  - For limited VRAM, use smaller quantized variants.
- Optional Downloads
  - Orange Q8_0 file (for 720p image-to-video; requires a high-performance GPU).
  - Original full-size model files for maximum quality if VRAM permits.
- Workflow Notes
  - Includes a negative prompt to suppress unwanted elements.
  - Uses a shorter node chain than the Hunyuan workflow while remaining capable.
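To choose a quantized variant for your VRAM, a rough size estimate helps. The sketch below multiplies parameter count by bits per weight. The bits-per-weight values follow from the GGUF block layouts; for example, Q8_0 stores 32 int8 weights plus one fp16 scale, which is 34 bytes per 32 weights, or 8.5 bits. Treat the results as lower bounds, because real files keep some layers in higher precision. The 4 GiB headroom for the text encoder, VAE, and activations is a hypothetical allowance. ComfyUI can also offload weights to system RAM, so this is a conservative starting point rather than a hard limit.

```python
# Approximate bits per weight for common GGUF block formats.
# Order matters: pick_quant walks from highest to lowest precision.
BITS_PER_WEIGHT = {
    "Q8_0": 8.5,     # 34 bytes per 32 weights
    "Q6_K": 6.5625,  # 210 bytes per 256 weights
    "Q5_0": 5.5,     # 22 bytes per 32 weights
    "Q4_0": 4.5,     # 18 bytes per 32 weights
}


def weights_gib(params_billion: float, quant: str) -> float:
    """Rough size of the quantized weights in GiB (ignores higher-precision layers)."""
    total_bytes = params_billion * 1e9 * BITS_PER_WEIGHT[quant] / 8
    return total_bytes / 2**30


def pick_quant(params_billion: float, vram_gib: float, headroom_gib: float = 4.0):
    """Return the highest-precision quant that leaves `headroom_gib` free, or None."""
    for quant in BITS_PER_WEIGHT:
        if weights_gib(params_billion, quant) + headroom_gib <= vram_gib:
            return quant
    return None


for vram in (12, 16, 24):
    print(f"{vram} GiB ->", pick_quant(14, vram))  # Wan 2.1 14B model
```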

Wan 2.1 Configuration Settings 🎛️

- Testing (Lower Quality, Faster)
  - Steps: 20
  - Frame rate: 16 FPS
  - Length: 33 frames (≈2 seconds)
  - Resolution: below 480p
- High-Quality Generation
  - Steps: 30
  - Frame rate: 16 FPS
  - Length: 81 frames (≈5 seconds)
  - Resolution: 480p or 720p
- Image-to-Video Version
  - Uses the CLIP Vision H model (clip_vision_h).
  - Needs only a few extra nodes compared to text-to-video.
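Going the other way, from a target duration to a frame count, explains why the settings use 33 and 81 frames rather than 32 and 80. The sketch below rounds up to the next count of the form 4n + 1. That constraint is an assumption consistent with the frame counts in this guide, not something the guide states outright. With it, the function reproduces every length listed above for both models.

```python
import math


def frames_for_seconds(seconds: float, fps: int) -> int:
    """Smallest 4n + 1 frame count covering `seconds` at `fps` (assumed latent constraint)."""
    raw = seconds * fps                       # e.g. 2 s at 16 FPS -> 32 frames
    n = max(0, math.ceil((raw - 1) / 4))      # round up to the next 4n + 1
    return 4 * n + 1


for s in (2, 3, 5):
    print(f"{s} s at 16 FPS ->", frames_for_seconds(s, 16), "frames")
```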

Performance Comparison Results 📊

Local GPU Performance

- Wan 2.1: ~400 seconds for small test videos.
- Hunyuan Video: ~700 seconds (run with `--cpu-vae` for AMD compatibility).
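`--cpu-vae` is a ComfyUI launch flag that runs the VAE on the CPU. The sketch below builds the launch command with that flag toggled. It assumes the script sits in the ComfyUI checkout next to `main.py`. It prints the command rather than running it; you can pass the returned list to `subprocess.run()` to launch ComfyUI.

```python
import shlex
import sys


def comfyui_command(main_py="main.py", cpu_vae=False, extra_args=()):
    """Build the argument list for launching ComfyUI's main.py."""
    cmd = [sys.executable, main_py]
    if cpu_vae:
        # Run the VAE on the CPU: the AMD workaround from the local test, at a speed cost.
        cmd.append("--cpu-vae")
    cmd.extend(extra_args)
    return cmd


if __name__ == "__main__":
    print(shlex.join(comfyui_command(cpu_vae=True)))
```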

Cloud GPU Performance

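- 480p Videos: 25–37 minutes on an L4 GPU.
- 720p Videos: faster on an L40S GPU.
- Relative Speed: in the cloud runs, Wan 2.1 takes roughly twice as long as Hunyuan Video at equivalent settings. This reverses the local ordering, likely because the local Hunyuan run used the slower CPU VAE.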

File Sizes & Compression

- Hunyuan Video (24 FPS): Smaller file sizes despite higher FPS.

- Wan 2.1 (16 FPS): Larger file sizes per second due to lower compression.

- Hunyuan Image2Video: Largest file sizes per frame among these options.
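These size claims mix "per second" and "per frame". Normalizing each output file both ways makes the comparison concrete, because at the same size per frame, a 24 FPS clip is larger per second than a 16 FPS clip. The sketch below is a minimal helper. The file sizes you feed it come from your own outputs; none are given here.

```python
def size_metrics(file_bytes: int, frames: int, fps: float) -> dict:
    """Normalize a clip's file size per frame and per second of video (in KiB)."""
    seconds = frames / fps
    return {
        "kib_per_frame": file_bytes / frames / 1024,
        "kib_per_second": file_bytes / seconds / 1024,
    }


# Usage with a hypothetical 2 MB file from an 81-frame, 16 FPS Wan 2.1 clip:
print(size_metrics(2_000_000, frames=81, fps=16))
```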

Video Quality Assessment

- 480p Results
  - Wan 2.1: Superior movement and motion realism.
  - Hunyuan Video: Better image style and visual aesthetics.
- 720p Results
  - Wan 2.1: Excellent movement, especially in image-to-video.
  - Hunyuan Video: Preferred image style and overall visual quality.
- Image-to-Video: Wan 2.1 shows particularly natural movement and smooth transitions.

Optimization Recommendations 🔧

Balancing Quality and Time

- For Wan 2.1
  - Use 20 steps instead of 30 for faster runs.
  - Start with 2–3 second videos (33–49 frames at 16 FPS, following the 4n + 1 frame counts used in the settings above) to reduce generation time to ~10–15 minutes.
- For Hunyuan Video
  - Use the GPU VAE when available.
  - On AMD GPUs, `--cpu-vae` can help with compatibility but slows down generation.
  - Test with quantized models before moving to full-precision files.
- Cloud GPU Strategy
  - Use an L4 GPU for 480p testing.
  - Upgrade to an L40S GPU for 720p or higher.
  - For long runs, weigh cloud costs against the time saved.
- Model Choice
  - Wan 2.1 excels in movement and motion realism.
  - Hunyuan Video provides superior image aesthetics and style.
  - Choose based on project needs and available compute.
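Deciding whether the faster GPU is worth it comes down to cost per run versus minutes saved. The sketch below does that arithmetic. The hourly rates and the faster GPU's run time in the usage line are placeholders, not real L4 or L40S prices or measured times. Substitute your provider's rates and your own timings.

```python
def run_cost(minutes: float, usd_per_hour: float) -> float:
    """Cost of one generation run billed at an hourly GPU rate."""
    return minutes / 60 * usd_per_hour


def compare_gpus(minutes_a: float, rate_a: float, minutes_b: float, rate_b: float):
    """Return (extra cost of GPU B over GPU A, minutes saved by B) for the same job."""
    extra_cost = run_cost(minutes_b, rate_b) - run_cost(minutes_a, rate_a)
    minutes_saved = minutes_a - minutes_b
    return extra_cost, minutes_saved


# Placeholder inputs: a 30-minute L4 run vs a hypothetical 12-minute run on a pricier GPU.
extra, saved = compare_gpus(30, 1.0, 12, 2.0)
print(f"extra cost: ${extra:.2f}, minutes saved: {saved}")
```

A negative extra cost means the faster GPU is also cheaper for that job, because billing stops sooner.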

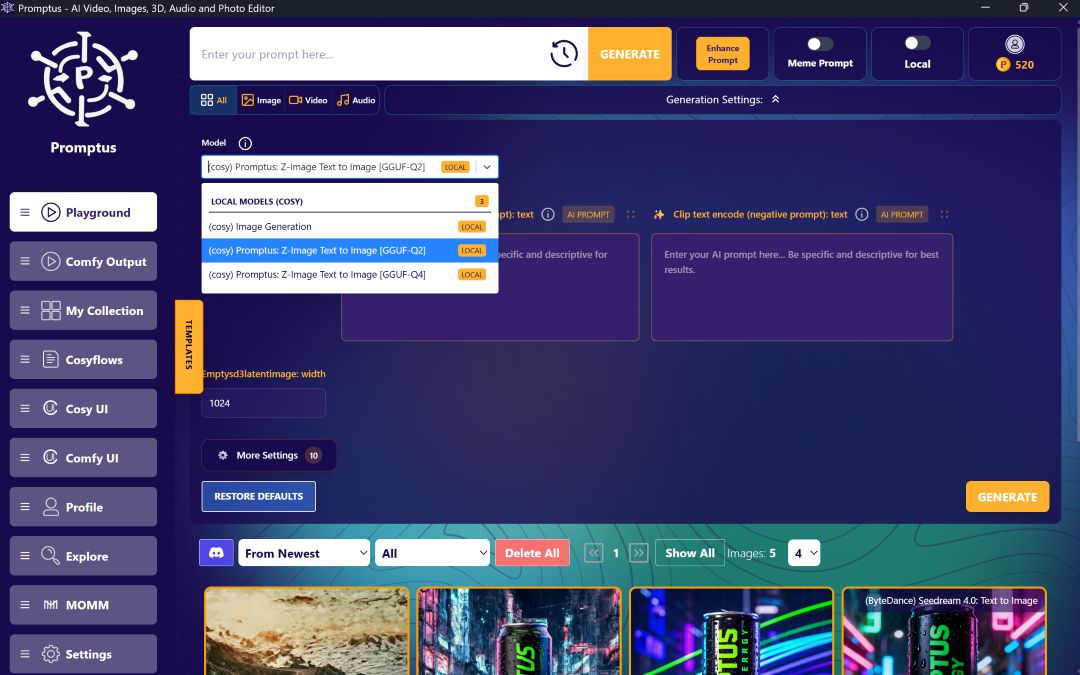

Beyond Video: Exploring Promptus 🌐

For a more accessible AI video generation workflow, consider Promptus:

- Browser-Based, Cloud-Powered: No local GPU constraints; run ComfyUI pipelines in the cloud.

- No-Code Interface (CosyFlows): Drag-and-drop workflow creation for video, image, and text models.

- Real-Time Collaboration & Publishing: Share and iterate with teams; integrate via Discord; publish templates.

- Built-In Model Access: Access advanced models (e.g., Hunyuan3D, Stable Diffusion variants) without manual downloads.

- Unified Workflows: Combine text-to-video, image-to-video, image editing, and other AI tasks in one environment.

Conclusion 🎯

Wan 2.1 and Hunyuan Video each offer unique strengths: Wan 2.1 for motion realism and natural movement, Hunyuan Video for visual style and aesthetics. By updating ComfyUI, installing the correct quantized models, and tuning configuration settings, you can generate high-quality AI videos locally or in the cloud.

For streamlined, collaborative workflows that simplify complex setups, leverage Promptus and its no-code CosyFlows interface to build, run, and share your video generation pipelines with ease. Happy generating! 🚀
