Wan 2.1 Local AI Video Generator: Complete Setup Guide

Wan 2.1 is an AI video generation model that runs entirely on your local PC without censorship restrictions. It generates high-quality videos from text descriptions and images, and it supports LoRA models for added customization. 🎬

Setting Up ComfyUI And Wan 2.1

The installation process begins with downloading ComfyUI for your operating system. Users with Nvidia 50 series GPUs should download the GitHub version instead of the standard release. Windows users can select the portable package for easier setup.

Wan 2.1 offers two model versions: a lightweight 1.3 billion parameter model and a robust 14 billion parameter model. The 14B version requires more VRAM but delivers superior results. Each version includes separate models for text-to-video and image-to-video generation.

After downloading the required files, organize them in your ComfyUI folder structure. Place the text encoder in models/text_encoders, the Wan video model in models/diffusion_models, and the VAE in models/vae. ⚙️
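
As a rough illustration of the folder layout, the sketch below maps each kind of downloaded file to a destination path. The subfolder names are assumptions based on a typical ComfyUI install, and `plan_destination` and the file name in the usage note are hypothetical, so check them against your own setup.

```python
from pathlib import Path

# Assumed subfolder names inside ComfyUI/models; verify against your install.
TARGET_FOLDERS = {
    "text_encoder": "text_encoders",
    "diffusion_model": "diffusion_models",
    "vae": "vae",
}


def plan_destination(comfy_root, kind, filename):
    """Return the path where a downloaded file of the given kind should go."""
    if kind not in TARGET_FOLDERS:
        raise ValueError(f"unknown model kind: {kind}")
    return Path(comfy_root) / "models" / TARGET_FOLDERS[kind] / filename
```

Calling `plan_destination("ComfyUI", "vae", "my_vae.safetensors")` returns the path `ComfyUI/models/vae/my_vae.safetensors`. The function only computes the path, so nothing is moved until you decide to copy the file yourself.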

Creating Videos From Text Descriptions

The text-to-video workflow starts with loading the diffusion model and configuring basic settings. Set your image resolution and output frame count: 69 frames produces a little over 4 seconds of video at 16 frames per second. The 480p model requires proportionally fewer frames.
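
The relationship between frame count and clip length is simple arithmetic. The sketch below assumes playback at 16 frames per second, the rate this guide mentions for the video combine node. Both helper names are illustrative.

```python
def clip_duration_seconds(frame_count, fps=16):
    """Length of a clip in seconds for a given frame count and playback rate."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    return frame_count / fps


def frames_for_duration(seconds, fps=16):
    """Frame count needed to cover a target duration, rounded to the nearest frame."""
    return round(seconds * fps)
```

With these defaults, `clip_duration_seconds(69)` gives 4.3125 seconds and `frames_for_duration(5)` gives 80 frames.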

The generation process uses a regular KSampler with the UniPC sampler and 20 steps as default settings. Increasing the step count improves output quality but significantly extends render time. Frame interpolation roughly doubles the frame count by calculating intermediate frames between existing ones.
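
To make the idea of intermediate frames concrete, here is a minimal sketch that inserts one blended frame between each pair of neighbours. It uses plain linear blending as a stand-in. Real interpolation nodes typically rely on learned motion estimation, so treat this as an illustration of the frame bookkeeping and not of the actual method.

```python
def interpolate_frames(frames):
    """Insert one blended frame between each pair of neighbouring frames.

    Each frame is a flat list of pixel values. Linear blending is a simple
    stand-in for the learned interpolation a real workflow would use.
    """
    if not frames:
        return []
    result = []
    for current, following in zip(frames, frames[1:]):
        result.append(current)
        # Average the two neighbours to get the in-between frame.
        midpoint = [(a + b) / 2 for a, b in zip(current, following)]
        result.append(midpoint)
    result.append(frames[-1])
    return result
```

An input of n frames yields 2n - 1 frames, which is why the frame count is described as roughly doubled.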

Effective Prompting Techniques

Successful video generation requires structured prompting with four key components:

- Initial scene description:

"A female warrior stands in the foreground of a crumbling futuristic city. She wears battle-worn sci-fi armor with glowing blue core and reflective metallic surfaces."

- Animation details:

"Her long black hair flows in the wind. She moves forward with fierce, focused expression."

- Camera movement:

"Camera follows warrior from low angle, tracking her forward motion."

- Art style:

"Hyperrealistic cinematic lighting, sharp detail, dramatic contrast, movie still aesthetics." 🎯

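The four components above can be assembled into a single prompt string. The sketch below is one possible way to do it; `build_prompt` is a hypothetical helper and not part of ComfyUI or Wan 2.1.

```python
def build_prompt(scene, animation, camera, style):
    """Join the four prompt components into one prompt string, in order."""
    parts = [scene, animation, camera, style]
    # Drop empty components and trim stray whitespace before joining.
    cleaned = [part.strip() for part in parts if part and part.strip()]
    return " ".join(cleaned)
```

Keeping the components as separate arguments makes it easy to swap the camera movement or art style while leaving the scene description untouched.
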
Using LoRA Models For Enhancement

LoRA models enhance video quality and style but require careful compatibility matching. The 1.3B and 14B parameter models use different LoRA types that cannot be interchanged. Applying an incompatible LoRA may decrease video quality.
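
One way to avoid mixing model sizes is to keep a small record of which base model each file was made for and filter against it. The sketch below assumes you maintain that record yourself. The size labels and file names are hypothetical, and nothing here inspects the files.

```python
def normalize_size(label):
    """Normalize a size label such as ' 14b ' to '14B' for comparison."""
    return label.strip().upper()


def compatible_loras(base_model_size, loras):
    """Return the file names whose recorded size label matches the base model.

    loras maps a file name to the size label of the base model it targets.
    """
    wanted = normalize_size(base_model_size)
    return [name for name, size in loras.items() if normalize_size(size) == wanted]
```

For example, `compatible_loras("14b", {"style_a.safetensors": "14B", "style_b.safetensors": "1.3B"})` returns `["style_a.safetensors"]`.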

Install LoRA models in the ComfyUI models/loras folder and refresh the interface with F5. Add LoRA models through the workflow interface and adjust strength settings as needed.

Converting Images To Videos

Image-to-video generation provides maximum creative control over output results. This workflow requires the CLIP Vision model, downloadable through ComfyUI's model manager.

Upload images up to 720 pixels on the longest side. Larger images automatically scale down while maintaining proportions. Set animation length between 40 and 69 frames depending on desired video duration. The video combine node sets the frame rate at 16 frames per second. 📸
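
The downscaling rule can be worked out by hand. The sketch below shows the arithmetic for fitting an image within 720 pixels on its longest side while keeping the aspect ratio. It is an assumption about how the scaling behaves, not the workflow's own code, and the rounding to whole pixels is a choice made here.

```python
def fit_longest_side(width, height, max_side=720):
    """Scale dimensions down so the longest side is at most max_side pixels."""
    if width <= 0 or height <= 0:
        raise ValueError("width and height must be positive")
    longest = max(width, height)
    if longest <= max_side:
        # Already small enough, so leave the image untouched.
        return width, height
    scale = max_side / longest
    return max(1, round(width * scale)), max(1, round(height * scale))
```

A 1920 by 1080 image comes out as 720 by 405, while a 640 by 480 image is returned unchanged.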

Camera movement prompts work better with helper LoRA models when the base model struggles with specific motions like arc shots or crash zooms.

Enhancing Output Quality

Frame interpolation and upscaling workflows improve final video quality significantly. The interpolation process doubles the frame rate from 16 to 32 FPS for smoother motion. Video upscaling requires the 4x-UltraSharp model, installable through ComfyUI's model manager.

Separate workflows handle different enhancement needs:

- Combined frame interpolation + upscaling

- Upscaling only for videos with already high frame rates
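
The choice between the two workflows can be written as a small decision helper. The sketch below makes several assumptions that are not from the workflows themselves: a clip counts as "already high frame rate" at 32 FPS or above, and the upscaler multiplies each dimension by 4 with no later resize. The workflow labels are made up for illustration.

```python
def plan_enhancement(width, height, fps, high_fps_threshold=32, upscale_factor=4):
    """Pick an enhancement workflow and estimate the resulting fps and size."""
    # Assumption: anything below the threshold benefits from interpolation.
    interpolate = fps < high_fps_threshold
    return {
        "workflow": "interpolate_and_upscale" if interpolate else "upscale_only",
        "fps": fps * 2 if interpolate else fps,
        "size": (width * upscale_factor, height * upscale_factor),
    }
```

For a 16 FPS clip the plan selects the combined workflow with a 32 FPS result, and a clip already at 32 FPS is routed to upscaling only.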

Advanced Workflow Management

The anywhere nodes system eliminates workflow complexity by automatically routing connections between components. This approach creates cleaner, more manageable workflows without tangled connection lines.

Basic settings panels consolidate key parameters including resolution, frame count, sampling steps, and model selections for streamlined operation.
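
Conceptually, a basic settings panel is a single record of the parameters listed above. The sketch below models that record as a dataclass. The class name, field names, resolution, and file names are placeholders, and only the frame count and step count come from the values mentioned in this guide.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BasicSettings:
    width: int = 832            # placeholder resolution, not from this guide
    height: int = 480           # placeholder resolution, not from this guide
    frame_count: int = 69       # frame count mentioned in this guide
    steps: int = 20             # default step count mentioned in this guide
    diffusion_model: str = "diffusion_model_placeholder.safetensors"
    text_encoder: str = "text_encoder_placeholder.safetensors"
    vae: str = "vae_placeholder.safetensors"

    def problems(self):
        """Return a list of human-readable problems; empty means usable."""
        found = []
        if self.width <= 0 or self.height <= 0:
            found.append("resolution must be positive")
        if self.frame_count < 1:
            found.append("frame_count must be at least 1")
        if self.steps < 1:
            found.append("steps must be at least 1")
        return found
```

Keeping the values in one place mirrors what the panel does in the interface: you change a parameter once and every part of the workflow that reads it stays in sync.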

Conclusion 🚀

Wan 2.1 represents a significant advancement in local AI video generation, offering professional-quality results without cloud dependencies or content restrictions. The combination of text-to-video and image-to-video capabilities, enhanced by LoRA model support, provides creators with comprehensive video generation tools.

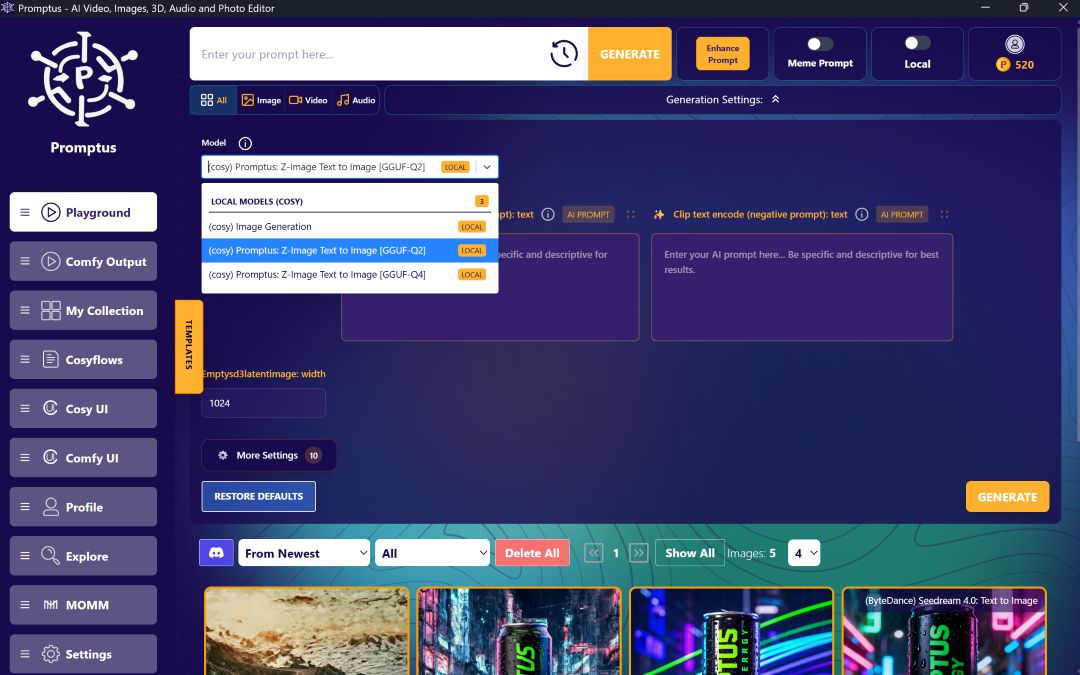

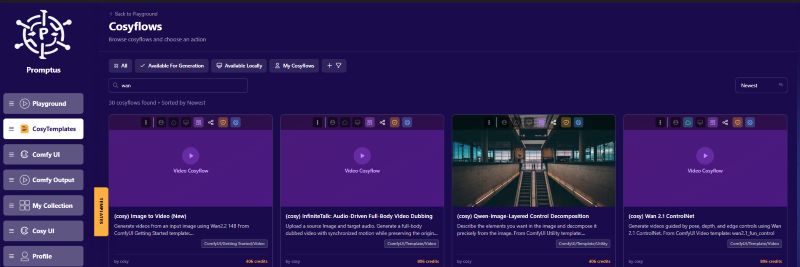

For creators seeking even more powerful solutions, Promptus offers a browser-based, cloud-powered visual AI platform that simplifies ComfyUI with a no-code interface called CosyFlows. Promptus provides real-time collaboration, built-in access to advanced models like Gemini Flash, HiDream, and Hunyuan3D, plus Discord integration and workflow publishing capabilities.
