LM Studio and ComfyUI Integration: Enhanced Vision Models and Network Features

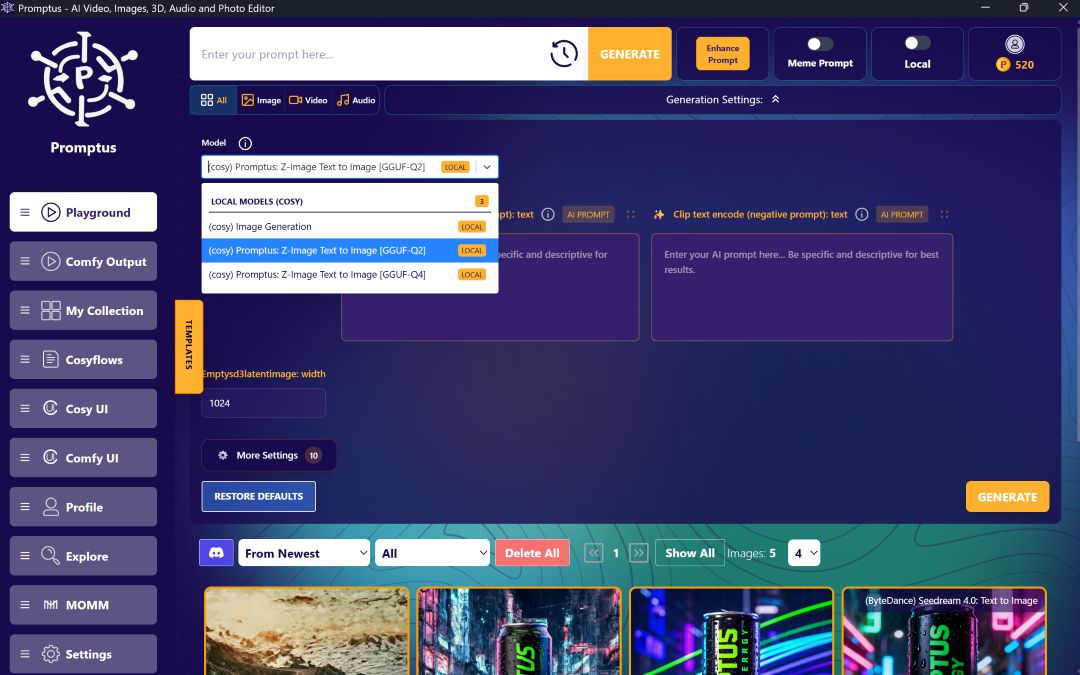

Building sophisticated AI workflows requires powerful tools and accessible platforms. Promptus Studio Comfy (PSC) stands as one of the leading platforms that builds upon the open-source ComfyUI framework.

Promptus is an app and browser-based, cloud-powered visual AI platform that provides an accessible interface for ComfyUI workflows through CosyFlows (a no-code interface), real-time collaboration, and built-in access to advanced models like Gemini Flash, HiDream, and Hunyuan3D.

This comprehensive update to the LM Studio node showcases how ComfyUI workflows can be enhanced with improved functionality and network capabilities.

Understanding The Enhanced LM Studio Node 🔧

The latest version of the LM Studio node has moved from using the standard Python requests library to the LM Studio Python SDK. This transition enables several powerful new features that make ComfyUI workflows more versatile and efficient.

The updated node introduces three major improvements: image input support for vision-enabled models, draft model functionality with speculative decoding, and network-based LLM unloading capabilities.

These enhancements fit the way many users prefer to work with ComfyUI today, which is also the approach Promptus Studio Comfy takes: combining the flexibility of the open-source ComfyUI ecosystem with intuitive, drag-and-drop workflows and access to advanced AI models including Stable Diffusion, GPT-4o, and Gemini. 🎯

Vision Model Integration 🖼️

The new image input option allows users to send images to vision-enabled models directly within their ComfyUI workflows. This feature opens up possibilities for multi-modal generation across text, image, and video content.

When working with vision models like LLaVA, users can now analyze images and generate detailed descriptions or prompts based on visual content. To implement this feature, simply connect an image input to the node and select a vision-enabled model.

The system will process both the image and any accompanying text prompts, generating comprehensive outputs that combine visual analysis with text generation capabilities.
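As a rough illustration of how an image and a text prompt travel together, the sketch below builds a request body in the OpenAI-style chat format that LM Studio's local server accepts. This is not the node's own code (the node uses the LM Studio Python SDK), and the model name `llava-v1.5-7b` and the placeholder image bytes are assumptions made for the example.

```python
import base64
import json


def build_vision_request(image_bytes: bytes, prompt: str, model: str,
                         mime_type: str = "image/png") -> dict:
    """Build an OpenAI-style chat payload carrying one image and one text prompt."""
    # Images are embedded inline as a base64 data URI.
    encoded = base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": model,
        "messages": [
            {
                "role": "user",
                "content": [
                    {"type": "text", "text": prompt},
                    {
                        "type": "image_url",
                        "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                    },
                ],
            }
        ],
    }


# Placeholder bytes stand in for a real image file read from disk.
# The model name is hypothetical; use whichever vision model is loaded.
payload = build_vision_request(
    b"\x89PNG placeholder", "Describe this image.", "llava-v1.5-7b"
)
print(json.dumps(payload, indent=2)[:200])
```

The payload would be sent to the server's chat completions endpoint. A text-only model would ignore or reject the image part, which is why the node requires a vision-enabled model for this input.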

Speculative Decoding And Performance Optimization ⚡

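The draft model feature introduces speculative decoding, which can improve inference speed by up to 30 percent. In this technique, a smaller, faster draft model proposes several tokens ahead, and the main model then verifies them, keeping the tokens it agrees with and correcting the first one it does not. Because checking a batch of proposed tokens is cheaper than generating each token in turn, processing is faster whenever most of the draft model's guesses are accepted.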

For optimal performance, pair a larger instruct model with a smaller draft model.

For example, using a 14B parameter main model with a 0.5B parameter draft model provides substantial speed improvements while maintaining output quality. This approach is particularly beneficial when working with complex ComfyUI workflows that require multiple text generation steps.
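The idea behind speculative decoding can be sketched with two toy "models" in plain Python. This is a simplified greedy variant for illustration only, not LM Studio's implementation: the `target_model` and `draft_model` functions are stand-ins invented for the example, and the bonus token a real engine emits after a fully accepted draft is omitted.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[str]], str]


def speculative_decode(target: NextToken, draft: NextToken, prompt: List[str],
                       max_new: int, k: int = 4) -> Tuple[List[str], int]:
    """Greedy speculative decoding; returns (new tokens, verification rounds)."""
    out = list(prompt)
    rounds = 0
    while len(out) - len(prompt) < max_new:
        # 1. The draft model cheaply proposes k tokens ahead.
        proposal: List[str] = []
        for _ in range(k):
            proposal.append(draft(out + proposal))
        # 2. The target model checks the proposal. A real engine scores all k
        #    positions in one batched pass, so each round counts as one pass.
        rounds += 1
        accepted: List[str] = []
        for tok in proposal:
            expected = target(out + accepted)
            if tok != expected:
                accepted.append(expected)  # correct the first mismatch, then stop
                break
            accepted.append(tok)
        out.extend(accepted)
    return out[len(prompt):len(prompt) + max_new], rounds


SENTENCE = "a red fox jumps over the lazy dog near the river".split()


def target_model(ctx: List[str]) -> str:
    # Stand-in for the large model: always continues the sentence correctly.
    return SENTENCE[len(ctx) % len(SENTENCE)]


def draft_model(ctx: List[str]) -> str:
    # Stand-in for the small model: guesses wrong at every fifth position.
    i = len(ctx)
    return "???" if i % 5 == 4 else SENTENCE[i % len(SENTENCE)]


tokens, rounds = speculative_decode(target_model, draft_model, [], max_new=len(SENTENCE))
print(" ".join(tokens))
print(f"{rounds} verification rounds for {len(tokens)} tokens")
```

The output always matches what the target model would have produced on its own, so quality is preserved. The speedup comes from needing fewer target passes than tokens, which only happens when the draft model agrees with the main model most of the time.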

Network-Based Model Management 🌐

One of the most significant improvements is the ability to manage LLM instances across network connections.

Users can now run LM Studio on remote machines and control model loading and unloading from their ComfyUI workflows.

This distributed approach allows for more efficient resource utilization and enables complex multi-stage processing pipelines.

The network functionality enables sophisticated workflow designs where different models run on different machines. For instance, vision analysis can occur on a powerful GPU server while text processing happens locally, optimizing both performance and resource usage.
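One way to picture this split is a small routing table that maps each workflow stage to the machine that should serve it. The sketch below is an assumption-laden illustration: the host name `gpu-server.local` and the stage names are hypothetical, and port 1234 is LM Studio's default local server port, which a given setup may override.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LMStudioHost:
    """Address of one machine running an LM Studio server."""
    host: str
    port: int = 1234  # LM Studio's default server port; may differ per setup

    @property
    def base_url(self) -> str:
        return f"http://{self.host}:{self.port}/v1"


# Hypothetical layout: vision on a remote GPU server, text on the local machine.
STAGE_HOSTS = {
    "vision": LMStudioHost("gpu-server.local"),
    "text": LMStudioHost("localhost"),
}


def endpoint_for(stage: str) -> str:
    """Return the base URL of the machine assigned to a workflow stage."""
    try:
        return STAGE_HOSTS[stage].base_url
    except KeyError:
        raise ValueError(f"no LM Studio host configured for stage {stage!r}") from None


print(endpoint_for("vision"))
print(endpoint_for("text"))
```

Keeping the mapping in one place means a stage can be moved to a different machine without touching the rest of the workflow.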

Practical Implementation Strategies 🛠️

When implementing these features in ComfyUI workflows, consider the following approaches:

- Use vision models for initial image analysis and description generation.

- Chain the output to text-focused models for prompt enhancement or creative expansion.

- Implement speculative decoding for time-sensitive applications where generation speed is crucial.

- Leverage network capabilities to distribute computational load across multiple machines.
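The first two steps above, vision analysis followed by prompt enhancement, can be sketched as a simple chain of two functions. The stub functions `fake_vision` and `fake_enhancer` are hypothetical stand-ins for real model calls, so the example runs without any server.

```python
from typing import Callable


def run_pipeline(image_path: str,
                 describe_image: Callable[[str], str],
                 enhance_prompt: Callable[[str], str]) -> str:
    """Chain a vision stage into a text stage and return the final prompt."""
    description = describe_image(image_path)  # stage 1: vision model
    return enhance_prompt(description)        # stage 2: text-focused model


def fake_vision(path: str) -> str:
    # Stub: a real implementation would send the image to a vision model.
    return f"a photo loaded from {path}"


def fake_enhancer(text: str) -> str:
    # Stub: a real implementation would ask a text model to expand the prompt.
    return f"{text}, cinematic lighting, highly detailed"


result = run_pipeline("input.png", fake_vision, fake_enhancer)
print(result)
```

Passing the stages in as callables keeps the chain independent of where each model runs, so either stage can be swapped for a local or a remote call.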

For users working with resource-constrained systems, two options provide additional flexibility: temporarily unloading ComfyUI models to free memory while an LLM runs, or running the LLM on a remote machine so that image generation models can stay loaded locally while text tasks are processed over the network.

Advanced Workflow Combinations 🔗

The enhanced node enables complex multi-stage workflows that were previously difficult to implement.

Users can create pipelines that analyze images remotely, enhance prompts locally, and generate final outputs using the most appropriate hardware for each task. These capabilities align with how Promptus Studio Comfy supports multi-modal generation and utilizes distributed GPU compute for faster rendering and high-resolution outputs.

Whether users are crafting branded visuals, animated stories, or concept art pipelines, these enhancements demonstrate how ComfyUI's modular framework can be made accessible to studios, agencies, and visual storytellers who need flexibility, speed, and quality at scale.

Installation And Availability 📥

The updated node is available through the ComfyUI manager, making installation straightforward for existing users.

Version 1.0.1 includes all the enhancements discussed here and maintains backward compatibility with existing workflows.

Users can download and install the node directly through the manager interface, with automatic updates ensuring access to the latest features and improvements as they become available.

Getting Started With Advanced ComfyUI Workflows 🎯

These enhancements represent just one example of how ComfyUI continues to evolve as a powerful platform for AI workflow creation.

For users interested in exploring these capabilities without the technical complexity, platforms like Promptus Studio Comfy provide accessible entry points to advanced AI workflows.

To begin working with these enhanced features, users can sign up for Promptus at https://www.promptus.ai and choose between Promptus Web for browser-based access or the Promptus App for desktop functionality. Both options provide integrated access to the tools and models needed for sophisticated AI content creation.

Conclusion 🌟

The integration of improved LM Studio functionality with ComfyUI workflows represents a significant step forward in accessible AI tool development.

These enhancements enable more efficient, flexible, and powerful content creation pipelines while maintaining the open-source accessibility that makes ComfyUI valuable to creators worldwide.

Through platforms like Promptus Studio Comfy, these advanced capabilities become accessible to users regardless of their technical background, democratizing access to cutting-edge AI workflow tools.
