Hunyuan Video 1.5 is one of the best open-source video generation models

Hunyuan Video 1.5 is Tencent’s breakthrough 8.3B-parameter video generation model, engineered to deliver high-quality text-to-video and image-to-video results while running on consumer-grade GPUs.

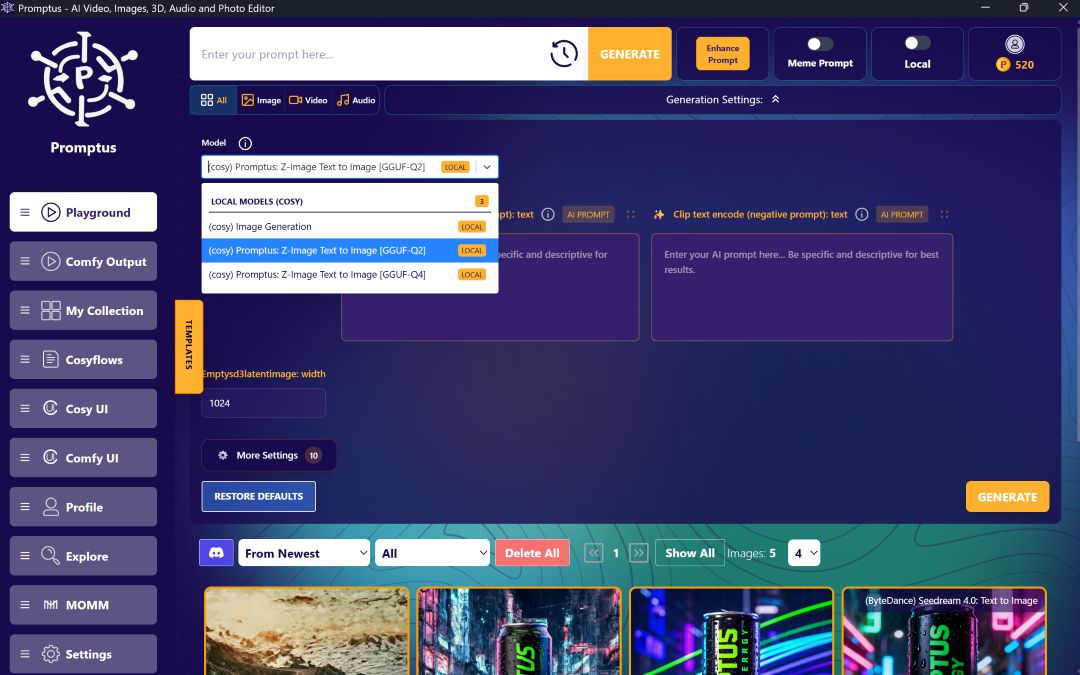

Its open-source release has also enabled a number of community variants, such as the (cosy) Hunyuan 1.5 family, which includes GGUF builds for 8GB VRAM cards and an ultra-light “5G” configuration that targets roughly 5GB of VRAM.

Hunyuan Video 1.5 Cosyflow Variants Explained

The community-led cosy releases repackage Hunyuan Video 1.5 for a variety of hardware and use cases. These versions are not official Tencent builds; they are community conveniences.

(cosy) Hunyuan 1.5 Text to Video

- Default community build.

- Best quality, full precision for the 8.3B model.

- Recommended for 16–24GB VRAM GPUs.

- Same behavior & output quality as the full reference implementation.

(cosy) Hunyuan 1.5 Text to Video (GGUF)

- GGUF-quantized version (GGUF is the same file format used for quantized LLMs in llama.cpp).

- Much smaller RAM / VRAM footprint.

- Ideal for users with 8–12GB VRAM GPUs.

- Slight quality reduction, but considerably faster and lighter.
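To see why quantization matters for an 8.3B-parameter model, the weight memory can be estimated as parameters × bits per weight ÷ 8. The sketch below uses the GGUF block formats Q8_0 and Q4_0 purely as examples (an assumption: the cosy builds may use other quantization types), and the figure covers the diffusion model's weights only.

```python
# Rough weight-only memory estimate for an 8.3B-parameter model.
# Q8_0 / Q4_0 are example GGUF formats; a given build may use others.
PARAMS = 8.3e9

BITS_PER_WEIGHT = {
    "fp16": 16.0,  # unquantized 16-bit weights
    "Q8_0": 8.5,   # blocks of 32 int8 weights + one fp16 scale = 34 bytes
    "Q4_0": 4.5,   # blocks of 32 4-bit weights + one fp16 scale = 18 bytes
}


def weight_gib(params: float, bits_per_weight: float) -> float:
    """Return the size of the weights alone, in GiB."""
    return params * bits_per_weight / 8 / 2**30


if __name__ == "__main__":
    for fmt, bpw in BITS_PER_WEIGHT.items():
        print(f"{fmt:>5}: {weight_gib(PARAMS, bpw):5.1f} GiB")
```

This gives roughly 15.5 GiB for fp16, 8.2 GiB for Q8_0, and 4.3 GiB for Q4_0, which lines up with the VRAM ranges above. The text encoders, VAE, and activations need memory on top of these numbers.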

(cosy) Hunyuan 1.5 Text to Video (8GB VRAM GGUF version)

- Specifically optimized for 8GB NVIDIA GPUs (e.g., RTX 3070, 4060).

- Uses aggressive quantization.

- Trade-offs:

  - Lower motion fidelity

  - Some artifacts in high-speed camera movement

  - Slightly softer visuals

Still remarkably good given its hardware requirements.

(cosy) Hunyuan 1.5 Text to Video (5G)

- “5G” refers to the 5GB VRAM target.

- Ultra-light, aggressively optimized.

- Useful for:

  - Notebook GPUs

  - Cloud free-tier instances

  - Small form factor mini-PCs

- Quality loss is noticeable, but still functional for concept previews or rapid iteration.
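The VRAM guidance in the variant list can be condensed into a small lookup. `pick_variant` is a hypothetical helper, not part of any cosy build; its thresholds come from the ranges listed for each variant, and sending 12–16GB cards to the GGUF build is an assumption, since the list does not cover that range.

```python
from typing import Optional

# Variant names as listed above; the helper itself is hypothetical.
FULL = "(cosy) Hunyuan 1.5 Text to Video"
GGUF = "(cosy) Hunyuan 1.5 Text to Video (GGUF)"
GGUF_8GB = "(cosy) Hunyuan 1.5 Text to Video (8GB VRAM GGUF version)"
LITE_5G = "(cosy) Hunyuan 1.5 Text to Video (5G)"


def pick_variant(vram_gb: float) -> Optional[str]:
    """Suggest a variant from a card's nominal VRAM in GB, or None if too small."""
    if vram_gb >= 16:
        return FULL      # recommended for 16-24GB cards
    if vram_gb > 8:
        return GGUF      # listed for 8-12GB; 12-16GB also lands here (assumption)
    if vram_gb >= 8:
        return GGUF_8GB  # tuned specifically for 8GB cards
    if vram_gb >= 5:
        return LITE_5G   # 5GB VRAM target
    return None          # below the lightest build's target


if __name__ == "__main__":
    for vram in (24, 12, 8, 6, 4):
        print(f"{vram} GB -> {pick_variant(vram)}")
```

An exact 8GB card is routed to the 8GB-specific build rather than the general GGUF build, because that version is described as optimized for exactly that memory size.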

Why People Call These Hunyuan Video Builds “Uncensored”

Tencent’s official release ships with standard content restrictions (similar to Stable Diffusion 1.5 and 3.0). The community workflow builds remove these filters, so prompts are not blocked:

- No safety classifier checkpoints

- No NSFW auto-masking

- No prompt sanitization

⚠️ This does not alter the underlying model weights. The model was not trained on explicit content; the builds simply accept any prompt without blocking it.

Hunyuan Video 1.5 uses an optimized multi-stage diffusion pipeline. In ComfyUI, its components are distributed as three groups of model files:

Model links

- text_encoders

- diffusion_models

- vae

📂 ComfyUI/
└── 📂 models/
    ├── 📂 text_encoders/
    │   ├── qwen_2.5_vl_7b_fp8_scaled.safetensors
    │   └── byt5_small_glyphxl_fp16.safetensors
    ├── 📂 diffusion_models/
    │   ├── hunyuanvideo1.5_1080p_sr_distilled_fp16.safetensors
    │   └── hunyuanvideo1.5_720p_t2v_fp16.safetensors
    └── 📂 vae/
        └── hunyuanvideo15_vae_fp16.safetensors

Workflow Templates

- Text-to-Video: video_hunyuan_video_1.5_720p_t2v.json

- Image-to-Video: video_hunyuan_video_1.5_720p_i2v.json
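Before loading either template, a short script can confirm that the model files from the folder layout above are in place. This is a sketch that assumes the default `ComfyUI/models` folders and the exact file names listed above; it does not read any extra model paths you may have configured.

```python
from pathlib import Path

# Expected files, copied from the folder layout above. Adjust the names
# if you downloaded different precisions of the same models.
EXPECTED = {
    "text_encoders": [
        "qwen_2.5_vl_7b_fp8_scaled.safetensors",
        "byt5_small_glyphxl_fp16.safetensors",
    ],
    "diffusion_models": [
        "hunyuanvideo1.5_1080p_sr_distilled_fp16.safetensors",
        "hunyuanvideo1.5_720p_t2v_fp16.safetensors",
    ],
    "vae": ["hunyuanvideo15_vae_fp16.safetensors"],
}


def missing_models(comfy_root: Path) -> list[Path]:
    """Return expected model files that are absent under comfy_root/models."""
    models = comfy_root / "models"
    return [
        models / folder / name
        for folder, names in EXPECTED.items()
        for name in names
        if not (models / folder / name).is_file()
    ]


if __name__ == "__main__":
    import sys

    root = Path(sys.argv[1]) if len(sys.argv) > 1 else Path("ComfyUI")
    missing = missing_models(root)
    for path in missing:
        print(f"missing: {path}")
    if not missing:
        print("all expected Hunyuan Video 1.5 model files found")
```

Run it with the path to your ComfyUI folder as the only argument; each `missing:` line points at a file to download or move into place.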

If ComfyUI cannot find the nodes:

- Update ComfyUI to the latest nightly build

- Check the startup log to make sure no extension failed to import

- Make sure the template exists in your ComfyUI version
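When ComfyUI reports missing nodes, it helps to know which node types a template actually uses. The sketch below assumes the two JSON shapes ComfyUI workflows commonly take: the UI format with a top-level `nodes` list whose entries carry a `type`, and the API format keyed by node id with a `class_type` field. The set of installed node names could, for example, come from the keys returned by a running server's `/object_info` endpoint; treat that as an assumption to verify against your ComfyUI version. Frontend-only nodes such as notes may show up as unknown and can be ignored.

```python
import json
from pathlib import Path


def node_types(workflow: dict) -> set[str]:
    """Collect node class names from a UI-format or API-format workflow."""
    if isinstance(workflow.get("nodes"), list):
        # UI format: {"nodes": [{"id": ..., "type": "KSampler", ...}], ...}
        return {n["type"] for n in workflow["nodes"] if "type" in n}
    # API format: {"3": {"class_type": "KSampler", "inputs": {...}}, ...}
    return {
        v["class_type"]
        for v in workflow.values()
        if isinstance(v, dict) and "class_type" in v
    }


def unknown_nodes(workflow: dict, installed: set[str]) -> set[str]:
    """Node types the workflow uses that the given install does not provide."""
    return node_types(workflow) - installed


if __name__ == "__main__":
    import sys

    if len(sys.argv) > 1:
        wf = json.loads(Path(sys.argv[1]).read_text(encoding="utf-8"))
        for name in sorted(node_types(wf)):
            print(name)
    else:
        print("usage: python list_nodes.py <workflow.json>")
```

Comparing this list against the installed node names narrows a "missing nodes" error down to the specific extension or ComfyUI update you still need.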

HunyuanVideo 1.5 is currently one of the most accessible, high-quality open-source video models available, and its community “cosy” variants unlock uncensored usage and low-VRAM deployments.

Limitations to Expect

Although powerful, HunyuanVideo 1.5 does have a few constraints:

- Videos longer than 10 seconds lose quality

- Fast motion may cause temporal jitter

- Fine detail can “melt” across frames

- GGUF versions reduce fidelity slightly

- Complex object interactions aren’t perfect
