Yes — Promptus runs ComfyUI locally, with GPU server, and workflows into one install. Flip to Offline Mode and everything runs on your machine — no cloud, no subscriptions.

ComfyUI has become a leading choice for creators building AI art, video, and 3D. But a frequent question is: Does it run locally? Yes — and it can run very well — especially when used via Promptus with ComfyUI.

In this article, we'll cover exactly how you can run ComfyUI locally, and what GPU hardware you’ll really need for various popular workflows like SDXL, Flux, AnimateDiff / Wan2.x, upscalers, and inpainting/outpainting.

What It Means to Run “Locally”

Running locally means all processing — generation, model inference, rendering — happens on your own computer, not relying on cloud servers. With Promptus + ComfyUI:

- You download and install everything (the GPU server, models, workflows).

- You toggle on “Offline Mode” so generation doesn’t send data or use external servers.

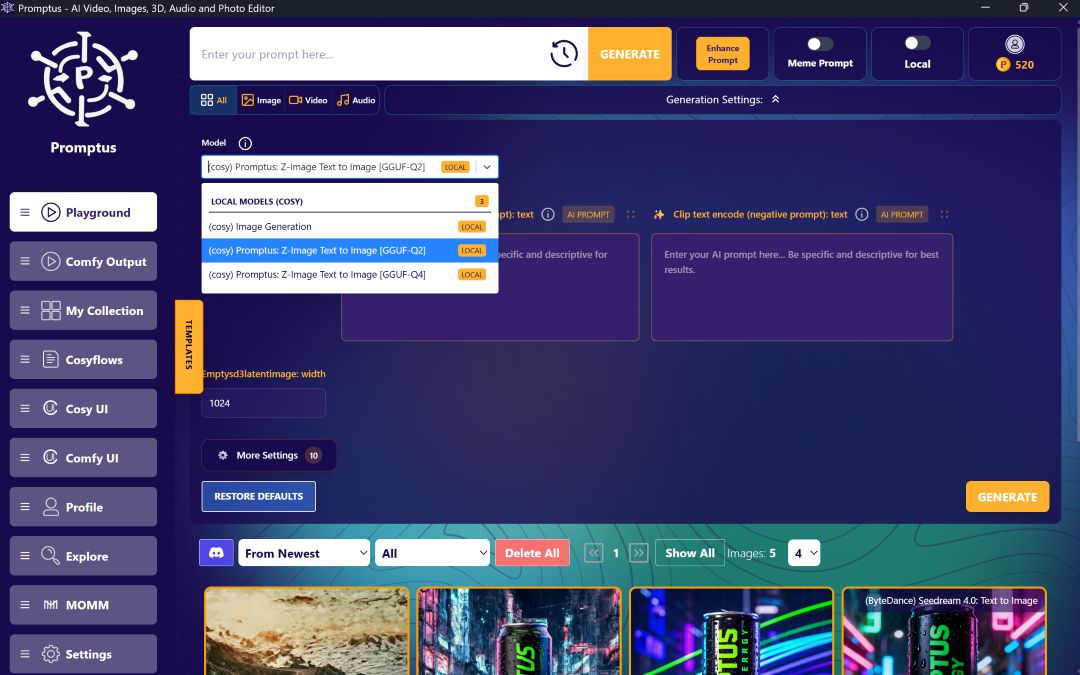

- You control what models you use (SDXL, Hunyuan, Flux, etc.), and which workflows (video, image, 3D).

Top ComfyUI Workflows 🔧

Here are some of the most popular ComfyUI workflows, what they do, and what GPUs people are using (or recommending) for them.

👉 If you want to dive deeper, here’s a list of the best ComfyUI workflows you can try.

ComfyUI Local Setup 🖥️

Here’s how creators typically run ComfyUI locally with Promptus, using the top workflows above:

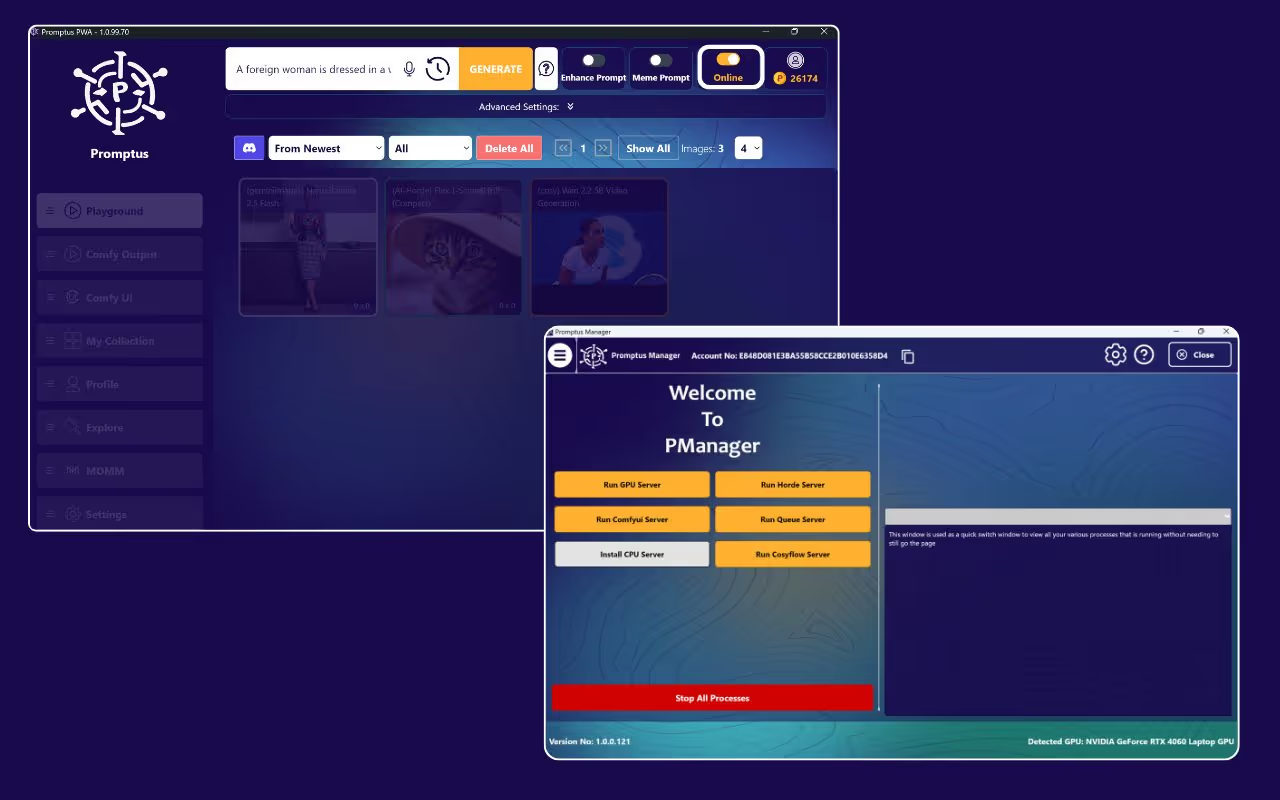

- Download Promptus and install the desktop app.

- In the app, go to Profile → Open ComfyUI Manager.

- Click Run GPU Server → Install. Promptus handles dependencies, including setting up Python, the GPU interface, etc.

- When that’s ready, toggle the app to Offline Mode so everything runs on your hardware (images, video, 3D).

- Download the required models (SDXL, Hunyuan, Flux etc.) so they’re stored locally.

- Select a workflow (for example SDXL image generation, or Wan2.x video) and generate. Promptus lets you pick workflows without manually wiring nodes.

Real-World GPU Examples & Trade-Offs 🔍

- A user trying WAN 2.2 First-Last Frame FP8 workflow reported that to run smoothly, especially for video, an RTX 4090 with 24 GB VRAM is strongly recommended; lower end GPUs are possible with quantized or lower precision models, but quality or speed drops.

- For SDXL at moderate resolution (1024×1024), 12-16 GB VRAM works quite comfortably (for example RTX 3060 or 4070). But once you push to 2048×2048, or want clean refinement (especially faces etc.), you start wanting 20-24+ GB.

- Low VRAM options: The GGUF variant of Wan2.2 Image-to-Video models can run on systems with less than 12 GB VRAM — but you’ll need to accept limitations (lower resolution, possibly slower rendering, lower batch sizes).

So — Does ComfyUI Run Locally, Really? ✅

Yes, absolutely. And with tools like Promptus, more people than ever are running it locally as their primary setup. If you have a GPU with 12-16 GB of VRAM, great.

If you have less, you can still run many workflows (especially upscaling, inpainting, simpler image generation) but expect slower speeds or lower resolution outputs.

For heavy video and 3D, 24+ GB starts to become very useful or even necessary.

Tips for Getting the Best Local Performance

- Use FP16 / quantized models when possible (helps reduce VRAM usage).

- Start with moderate resolutions (1024 × 1024) rather than going straight to 4K.

- Have fast SSD storage for models. Slow drive = long load times.

- Keep system RAM — 16-32 GB is helpful for holding data and handling large models.

- Keep drivers & ComfyUI / Promptus up to date; sometimes optimizations drop in that reduce VRAM requirement.

Summary 🔎

- ComfyUI does run locally, especially when used through Promptus.

- You can run SDXL, Hunyuan, Flux, AnimateDiff/Wan2.x, upscaling, and inpainting workflows locally — the key is having enough GPU memory and using optimized workflows when needed.

- Promptus simplifies the setup: download, install GPU server, switch to offline mode, pick workflow, generate.

%20(2).avif)

%20transparent.avif)