Cloud vs Local GPU: Complete Performance and Cost Comparison for AI Generation

Ever wondered if renting a powerful cloud GPU is better than using a local GPU? This comparison goes beyond just performance to examine real costs, including electricity bills and hardware investments. With local GPU prices reaching new heights and AI models demanding more power than ever, understanding the true value proposition becomes crucial for creators and businesses alike.

💰 The GPU Dilemma

Local GPU prices are climbing to unprecedented levels, and cutting-edge hardware like the current NVIDIA RTX 50 series can be hard to find in stock. Meanwhile, electricity costs continue to rise, and AI models keep growing in size and complexity, demanding more GPU performance and VRAM.

This creates a perfect storm where creators must carefully weigh their options between local and cloud-based solutions.

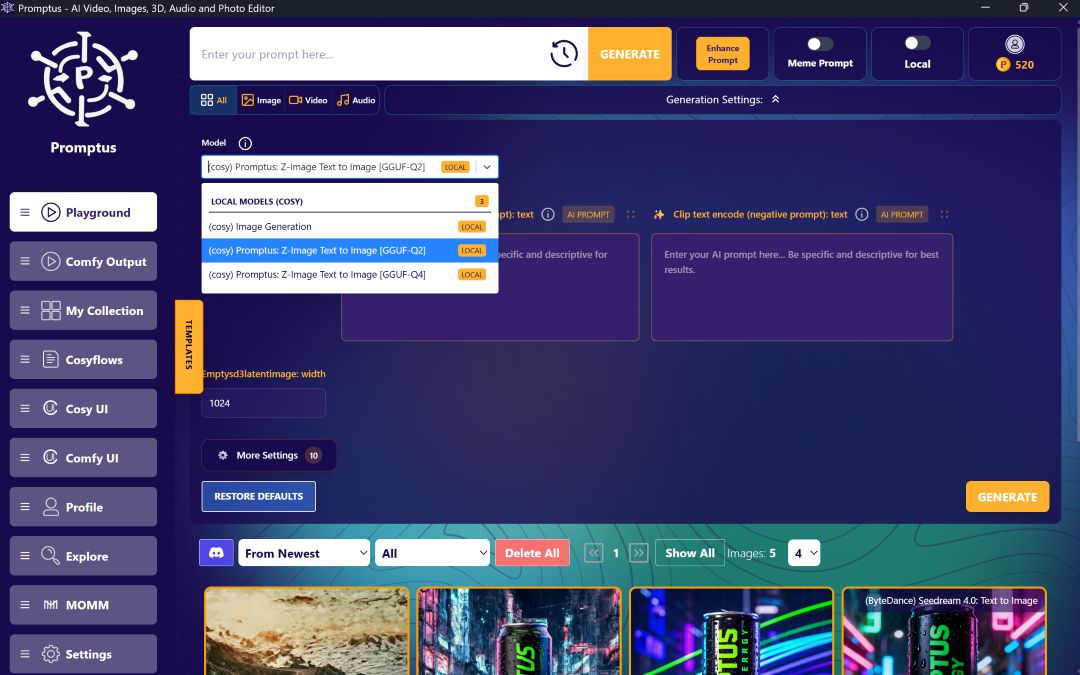

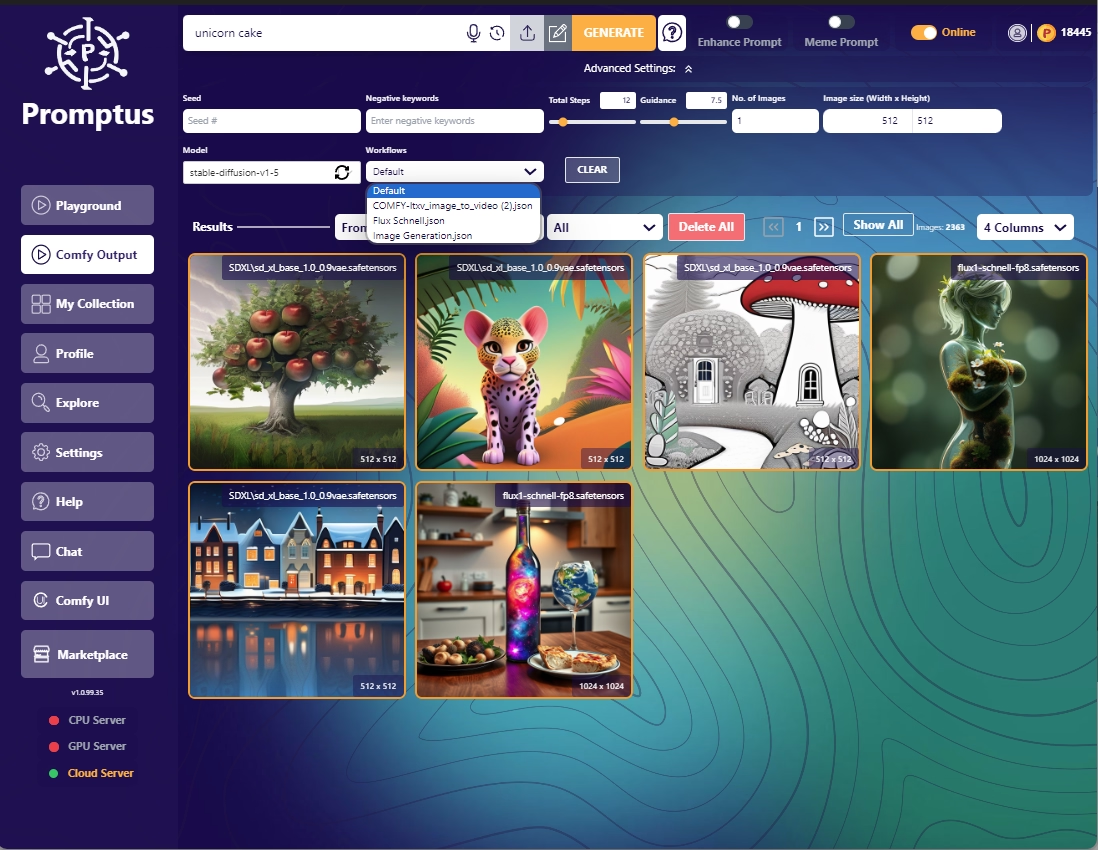

🧠 What is Promptus Studio Comfy?

For those working with advanced AI workflows, Promptus Studio Comfy (PSC) is one of the leading platforms built on the open-source ComfyUI framework. Promptus is a browser-based, cloud-powered visual AI platform that provides:

- A no-code interface for ComfyUI (CosyFlows)

- Real-time collaboration

- Access to models like Gemini Flash, HiDream, and Hunyuan3D

- Discord integration

- Workflow publishing

It’s popular among solo creators and creative teams seeking powerful workflows without technical complexity.

🧩 Why PSC Stands Out

Promptus Studio Comfy reflects how many users prefer to interact with ComfyUI today: through intuitive, drag-and-drop workflows. It supports:

- Multi-modal generation (text, image, video)

- Distributed GPU compute for faster rendering

- High-resolution outputs

Whether you're crafting branded visuals, animations, or concept art, PSC delivers flexibility, speed, and quality at scale.

⚖️ Local vs. Cloud GPU: The Core Trade-Offs

There are clear pros and cons to both setups:

Local GPU Pros:

- Always available

- No internet dependency

- Electricity is your only recurring cost

Local GPU Cons:

- High upfront investment

- Limited upgrade flexibility

- VRAM or speed may fall short of what newer, larger AI models need

Cloud GPU Pros:

- Access to high-end hardware instantly

- Pay-as-you-go pricing

- No power bill or maintenance

Cloud GPU Cons:

- Depends on internet connection

- Usage cost can vary

- Potential queue times during peak hours

🚨 When You Have No Choice: Decisive Factors

Some use cases make the decision for you:

- Privacy: If you work with confidential data, a local setup is non-negotiable.

- VRAM Needs: Some workflows need more than 32 GB of VRAM, which is the capacity of an RTX 5090. Only cloud GPUs can meet these demands affordably.

- Time Sensitivity: For deadlines, cloud GPUs provide instant, scalable power without infrastructure hassle.

⚙️ Performance Benchmarking Methodology

To evaluate true cost and speed, two AI workload scenarios were tested:

- GPU Workload Benchmark: ComfyUI sampling steps were run to simulate full GPU usage. Results can be compared with those shared in the ComfyUI GitHub discussions.

- VRAM-Heavy Workload: Hunyuan Video generation at a reduced resolution was used to simulate high memory demand.

Testing Platform:

Lightning AI was chosen for cloud testing because of its cost-efficiency and a user experience that resembles a local setup.

📈 Real-World Performance Results

ComfyUI Benchmark Results:

- AMD RX 6800 lags significantly.

- AMD RX 7900 XTX performs much better.

- NVIDIA 4090 is among the fastest local cards, and the 5090 is only marginally faster.

- Cloud GPUs: the T4 is the slowest, while the L40S outperforms even a local 4090.

Hunyuan Video Benchmark:

- Cloud GPUs outperform local ones, except the T4.

- 8GB VRAM cards struggle.

- Even the L40S takes over 10 minutes to render a full 720p video.

💸 Cost Analysis: The Complete Picture

Local GPU Costing:

- Based on power consumption only

- Excludes depreciation and initial hardware cost

- Electricity rates vary by region (UK, Germany, US)

Cloud GPU Costing:

- Simple hourly rate

- Performance included in price
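The two costing models above reduce to simple arithmetic. The sketch below is a minimal illustration, not the exact method used for this comparison. The function names and every number in the usage lines (450 W draw, 0.30 per kWh, 1.80 per hour, a 10-minute job) are hypothetical placeholders, so substitute your own measurements.

```python
def local_cost_per_job(power_watts: float, minutes: float, rate_per_kwh: float) -> float:
    """Electricity cost of one job on a local GPU (hardware cost excluded)."""
    kwh = (power_watts / 1000) * (minutes / 60)
    return kwh * rate_per_kwh


def cloud_cost_per_job(hourly_rate: float, minutes: float) -> float:
    """Rental cost of one job on a cloud GPU billed at a simple hourly rate."""
    return hourly_rate * (minutes / 60)


# Hypothetical example values, not measurements from this comparison.
local = local_cost_per_job(power_watts=450, minutes=10, rate_per_kwh=0.30)
cloud = cloud_cost_per_job(hourly_rate=1.80, minutes=10)
print(f"local: {local:.4f}  cloud: {cloud:.4f}")
```

In practice the job duration differs per GPU, so measure `minutes` separately for each card: a faster cloud GPU with a higher hourly rate can still come out cheaper per job.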

💵 Image vs. Video Generation Economics

Standard Image Generation:

- Cloud GPUs are not cost-effective for standard SDXL tasks

- The RTX 4080 is the best local option for image cost-performance

Video Generation:

- Cloud GPUs like L4 and L40S are better value for VRAM-intensive tasks

- Ideal for users with older or mid-range hardware

📊 The Break-Even Analysis

Using the 5090 as an example:

- Around 65,000 benchmark videos are needed to break even on hardware cost

- For 720p video at ~50 cents per cloud render, about 5,000 videos justify a local GPU purchase

This doesn’t factor in depreciation, tech obsolescence, or instant hardware access via cloud.
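The break-even point can be sketched as the hardware price divided by the saving per render. This is a minimal sketch under stated assumptions: the `break_even_renders` name is hypothetical, and the hardware price of 2,500 is not quoted in this comparison; it is simply what 5,000 renders at about 50 cents each add up to. Local electricity per render defaults to zero and can be passed in for a stricter estimate.

```python
import math


def break_even_renders(hardware_cost: float,
                       cloud_cost_per_render: float,
                       local_cost_per_render: float = 0.0) -> int:
    """Number of renders after which buying the GPU beats renting."""
    saving = cloud_cost_per_render - local_cost_per_render
    if saving <= 0:
        raise ValueError("cloud is not more expensive per render; no break-even")
    return math.ceil(hardware_cost / saving)


# Assumed hardware price of 2500, implied by 5,000 renders at ~0.50 each.
print(break_even_renders(2500, 0.50))
# A hypothetical 0.05 of electricity per render raises the threshold.
print(break_even_renders(2500, 0.50, local_cost_per_render=0.05))
```

The cheaper each cloud render is, the more renders it takes to pay off the card, which is why the short benchmark videos need a far higher count than full 720p renders.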

🧩 Making the Right Choice for Your Workflow

Occasional AI Use:

- Cloud GPUs are more economical

- Ideal after exhausting free-tier hours

Heavy AI Use:

- Local GPUs offer better long-term ROI if VRAM and speed are sufficient

Professional Workflows:

- Studios and agencies benefit from platforms like Promptus Studio Comfy

- Sign up at https://www.promptus.ai

- Access via web or app for enterprise-grade AI capabilities without infrastructure investment

✅ Conclusion

The local vs. cloud GPU decision goes beyond speed and price. It comes down to matching your real needs:

- Privacy ➜ Go local

- VRAM-heavy tasks ➜ Use cloud

- Frequent AI generation ➜ Invest in local GPUs

- Sporadic usage or fast scaling ➜ Cloud wins

🎯 Promptus Studio Comfy makes AI generation scalable, accessible, and creator-focused. It bridges power and usability, so professionals can work efficiently without technical barriers.

Make your choice based on facts, and align it with your goals, usage, and budget.
