Bringing Multiple AI Voices to Life with MultiTalk + WAN 2.1

WAN 2.1 Lip Sync in Promptus ComfyUI Just Got a Massive Upgrade!

In this video, we explore the latest evolution of AI-driven talking avatars: the MultiTalk Lipsync model now fully integrated into the WAN 2.1 video framework, delivering multi-character AI conversations that feel impressively natural.

New MultiTalk Features in WAN 2.1

- Multi-audio support for up to four unique AI voices in one video.

- A sleek workflow combining:

  - Ollama (or other LLMs) to structure conversational scripts.

  - Chatterbox Dialog TTS, including voice cloning demos with both short and longer samples.

  - Updated WAN Video for proper video lip-sync and face animation.

  - Segment Anything + Prepare Mask/Image Groups to isolate characters for accurate visual control.

  - Speaker diarization to identify which AI character is speaking when.
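
To make the diarization step a bit more concrete, here is a minimal sketch of how "who speaks when" can be turned into one audio track per character. It assumes the diarization output is a list of `(speaker, start, end)` tuples in seconds and that the mixed audio is a plain list of samples. The function name `split_by_speaker` and the segment format are hypothetical and are not the API of any node in the workflow.

```python
from collections import defaultdict

def split_by_speaker(samples, segments, sample_rate):
    """Return one full-length track per speaker, silent wherever that speaker is not talking.

    samples:   mixed audio as a list of floats (assumed format)
    segments:  list of (speaker, start_seconds, end_seconds) tuples (assumed format)
    """
    # Every speaker starts with a silent track as long as the original audio.
    tracks = defaultdict(lambda: [0.0] * len(samples))
    for speaker, start_s, end_s in segments:
        lo = max(0, int(round(start_s * sample_rate)))
        hi = min(len(samples), int(round(end_s * sample_rate)))
        # Copy only the samples that fall inside this speaker's segment.
        tracks[speaker][lo:hi] = samples[lo:hi]
    return dict(tracks)

# Toy example: 6 samples at 2 samples per second, two speakers.
mixed = [0.1, 0.2, 0.3, 0.4, 0.5, 0.6]
segments = [("A", 0.0, 1.0), ("B", 1.0, 3.0)]
tracks = split_by_speaker(mixed, segments, sample_rate=2)
```

Keeping every track the same length as the original audio means each character's track stays aligned with the video timeline, which is what a per-character lip-sync step needs.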

This pipeline runs locally or on cloud GPUs, so creators can build their own AI-hosted podcasts, interviews, and multi-character stories right from ComfyUI.

🔍 Real-World Testing & Performance

We don’t just demo, we test. You’ll see a 3‑minute demo using two voices, split cleanly into separate audio tracks. Using the WAN 2.1 framework, the video renders 1,500 frames in chunks (~81 frames each), taking around 19 minutes. You’ll see the smooth flow of animation and realistic background details, along with areas that still need fine-tuning, such as occasional mouth flicker or unwanted body movement while only one avatar is speaking.
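
For a rough feel of what those numbers mean per chunk, here is a small back-of-the-envelope sketch. It assumes the chunks do not overlap and that render time is spread evenly across them. Both are simplifying assumptions, and the helper `chunk_ranges` is hypothetical.

```python
TOTAL_FRAMES = 1500      # frame count reported in the test
FRAMES_PER_CHUNK = 81    # approximate chunk size reported in the test
RENDER_MINUTES = 19      # approximate total render time reported in the test

def chunk_ranges(total_frames, chunk_size):
    """Yield (start, end) frame ranges, end exclusive, assuming no overlap between chunks."""
    for start in range(0, total_frames, chunk_size):
        yield start, min(start + chunk_size, total_frames)

chunks = list(chunk_ranges(TOTAL_FRAMES, FRAMES_PER_CHUNK))
minutes_per_chunk = RENDER_MINUTES / len(chunks)

print(len(chunks), chunks[-1], round(minutes_per_chunk, 2))
# prints: 19 (1458, 1500) 1.0
```

Under these assumptions the render works out to 19 chunks at roughly one minute each, with a shorter final chunk of 42 frames.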

🎯 Where It Shines (and Where It’s Still Rough)

✅ Pros:

- Engaging, podcast-style conversational flow

- Clean separation of voices → cleaner lip-sync

- Background scene presence

- Runs offline with GGUF quantization support

⚠️ Cons:

- Occasionally both avatars move while only one voice is speaking

- Facial sync not always perfect

- The system doesn’t always assign masks to audio tracks correctly

One clear takeaway? Multi-character setups need explicit mask-to-audio assignment, similar to the approach taken by Kling AI or Runway.
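
As an illustration of what explicit assignment could look like, here is a minimal sketch in which the user states which character mask each audio track drives and the mapping is validated before rendering. This is purely hypothetical: `assign_masks_to_audio`, the mask names, and the file names are made up for the example, and it does not describe how WAN 2.1, MultiTalk, or any other tool works internally.

```python
def assign_masks_to_audio(mask_ids, audio_tracks, mapping):
    """Validate an explicit one-to-one mask -> audio mapping and return ordered pairs."""
    unknown_masks = set(mapping) - set(mask_ids)
    unknown_tracks = set(mapping.values()) - set(audio_tracks)
    if unknown_masks or unknown_tracks:
        raise ValueError(
            f"unknown masks {sorted(unknown_masks)} or tracks {sorted(unknown_tracks)}"
        )
    # Each audio track should drive exactly one character.
    if len(set(mapping.values())) != len(mapping):
        raise ValueError("each audio track may drive only one mask")
    missing = [m for m in mask_ids if m not in mapping]
    if missing:
        raise ValueError(f"masks without an audio track: {missing}")
    # Return pairs in mask order so downstream steps see a stable ordering.
    return [(m, mapping[m]) for m in mask_ids]

pairs = assign_masks_to_audio(
    mask_ids=["left_host", "right_host"],            # hypothetical mask names
    audio_tracks=["voice_1.wav", "voice_2.wav"],     # hypothetical file names
    mapping={"left_host": "voice_1.wav", "right_host": "voice_2.wav"},
)
```

Failing loudly on a missing or duplicated assignment is the point of the design: the pairing is stated by the user up front instead of being guessed at render time.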

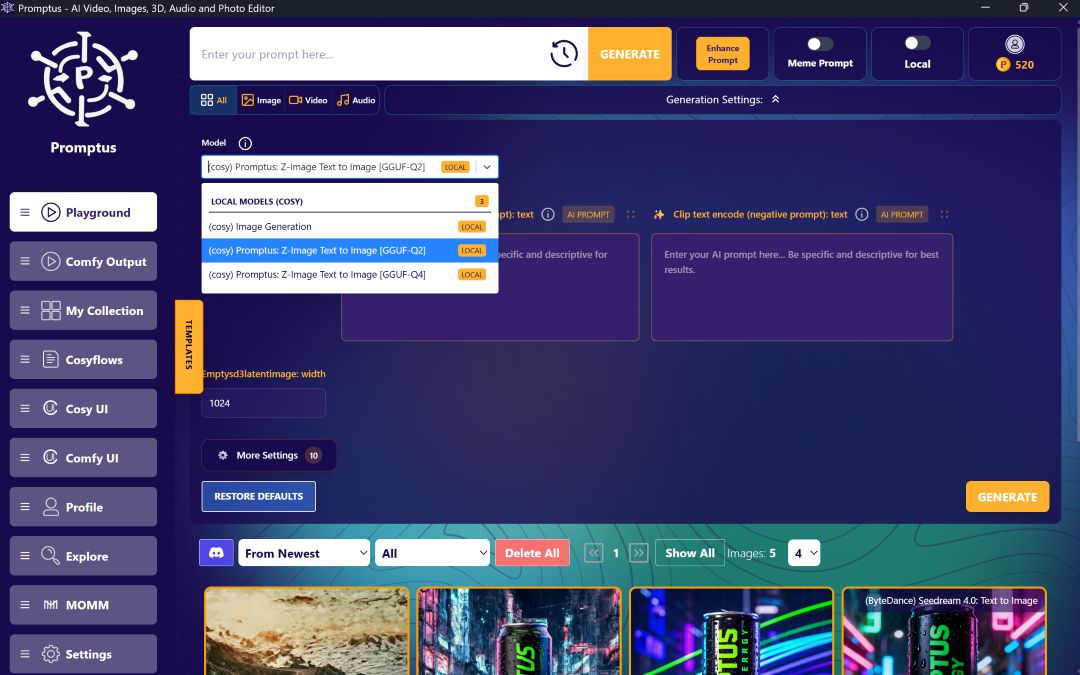

Promptus App - Buy Once, Create Locally

This full pipeline is built into the Promptus platform, which offers powerful ComfyUI-based tools for AI image, video, and audio generation. Best of all, there's a one-time purchase option ($49 lifetime license) without monthly fees. That single payment grants:

- Full offline access to WAN 2.1 and all Cosyflows

- 10,000 bonus credits

- ComfyUI interface

- Lifetime updates and support

Optional monthly subscription tiers are also available for creators who need ongoing cloud GPU access.

🔧 Who Should Watch This?

- AI developers building multi-character avatars

- Content creators exploring AI-hosted podcasts or interviews

- Technologists mastering Ollama, TTS, segmentation, and lip-sync models

This tutorial isn't just a walkthrough — it's a realistic look at the pipeline's strengths and weaknesses, giving you the tools to decide if this is right for your next project.

🔗 Resources & Links

- WAN 2.1 Video Workflows

- Get the Promptus app: a one-time $49 purchase with 10k credits included!

Dive in, experiment, and let us know how it goes!
