Generate AI Videos with Wan 2.1

Wan 2.1, Alibaba's video generation model, has been released to the public for free. It lets you create videos on your own computer, much as you would generate images, with better physics comprehension and visual coherence than previous open models.

While the official installation can be complex and resource-intensive, ComfyUI with specialized workflows makes the process much simpler. The official model needs a large amount of GPU memory (VRAM), but community-created compressed versions deliver good quality on typical home PCs with a capable graphics card.
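As a rough illustration of why compressed versions help, the memory needed just to hold a model's weights is its parameter count times the bytes per parameter. This sketch assumes the 14-billion-parameter Wan 2.1 variant and ignores activations, the text encoder, and the VAE, so real usage is higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate size of the model weights alone, in GB (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# Assumed parameter count of the 14B Wan 2.1 variant (weights only).
PARAMS_14B = 14e9

for label, bits in [("bf16", 16), ("fp8", 8), ("4-bit", 4)]:
    print(f"{label}: ~{weight_memory_gb(PARAMS_14B, bits):.0f} GB of weights")
```

This prints roughly 28 GB, 14 GB, and 7 GB: each halving of precision halves the weight footprint, which is the main lever community builds use.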

What Makes Wan 2.1 Special

Wan 2.1 stands out from other video generation models with its advanced understanding of physics and superior visual coherence. The model supports both English and Chinese prompts and offers both image-to-video and text-to-video capabilities. You can try it online through the official Hugging Face page, though processing times can be slow.

ComfyUI for Video Generation

Before installing Wan 2.1, open ComfyUI as a tab in the Promptus app. You need a working Promptus installation with ComfyUI and the ComfyUI Manager plugin. The manager is essential for installing custom nodes and workflows and for managing extensions.

The ComfyUI-WanVideoWrapper custom nodes by Kijai let ComfyUI, including the instance inside Promptus, interface with Wan video models. Kijai has also published optimized, compressed versions of the models on Hugging Face in formats suitable for most PCs.

Installing the Wan Video Models

Open ComfyUI Manager and navigate to Custom Nodes Manager. Search for "wan video" and install ComfyUI-WanVideoWrapper by Kijai, selecting the nightly version for the latest features.

If installation fails, you may need to lower ComfyUI Manager's security level. In your ComfyUI folder, open user/default/ComfyUI-Manager/config.ini in a text editor, change security_level to weak, save the file, and restart ComfyUI before trying the installation again.
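If you prefer to script that change, here is a minimal sketch using Python's standard configparser. It assumes the setting is a security_level key inside a [default] section (unless another section already holds it), and the allowed-values check reflects ComfyUI-Manager's levels as we understand them. configparser rewrites the whole file, so any comments in it are lost.

```python
import configparser
from pathlib import Path

# Security levels assumed to be accepted by ComfyUI-Manager.
ALLOWED_LEVELS = {"strong", "normal", "normal-", "weak"}


def set_security_level(config_path, level="weak"):
    """Set security_level in ComfyUI-Manager's config.ini; return the section used.

    Assumption: the key lives in a [default] section unless another section
    already defines it. configparser rewrites the file, so comments are lost.
    """
    if level not in ALLOWED_LEVELS:
        raise ValueError(f"unknown security level: {level!r}")
    path = Path(config_path)
    # interpolation=None leaves any '%' characters in other values untouched.
    config = configparser.ConfigParser(interpolation=None)
    config.read(path, encoding="utf-8")
    section = next(
        (s for s in config.sections() if config.has_option(s, "security_level")),
        "default",
    )
    if not config.has_section(section):
        config.add_section(section)
    config.set(section, "security_level", level)
    with path.open("w", encoding="utf-8") as f:
        config.write(f)
    return section


# Example (adjust the path to your installation):
# set_security_level("ComfyUI/user/default/ComfyUI-Manager/config.ini", "weak")
```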

After successful installation, restart ComfyUI to complete the setup process.

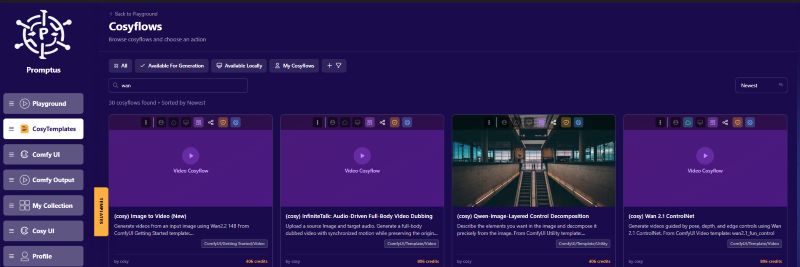

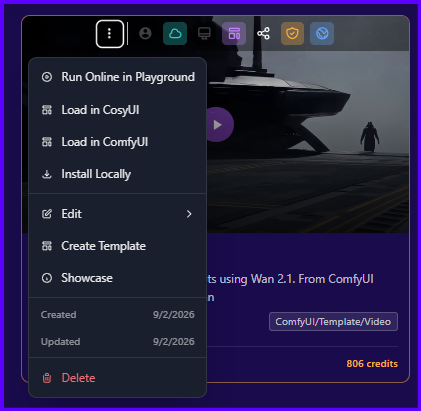

Alternatively, use the CosyTemplates in Promptus, as shown in the images above, to find the Wan 2.1 workflows. Click "Install locally" and Promptus will download all the required files automatically.

Downloading and Setting Up Workflows

On the ComfyUI-WanVideoWrapper GitHub page, open the example workflows folder. Download the 480p image-to-video example by clicking "Download raw file", then drag the downloaded JSON file into ComfyUI.

ComfyUI will flag any nodes it does not recognize. In ComfyUI Manager, choose Install Missing Custom Nodes to install them. This typically adds two additional packages, which must be installed before you continue.
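ComfyUI Manager does this detection for you, but the idea is simple: compare the node types a workflow uses with the node classes the running server knows. This sketch queries ComfyUI's /object_info endpoint and assumes the default address http://127.0.0.1:8188 (the instance inside Promptus may use another). The set of frontend-only node types it skips, and the workflow.json file name in the example comment, are assumptions.

```python
import json
import urllib.request

# Node types drawn only by the ComfyUI frontend; assumed never listed by the server.
FRONTEND_ONLY = {"Note", "MarkdownNote", "Reroute", "PrimitiveNode"}


def workflow_node_types(workflow):
    """Return the node type names used by a ComfyUI workflow dict.

    Handles the UI format (a "nodes" list whose entries carry "type") and the
    API format (node ids mapped to objects with a "class_type").
    """
    nodes = workflow.get("nodes")
    if isinstance(nodes, list):
        return {n["type"] for n in nodes if "type" in n}
    return {
        n["class_type"]
        for n in workflow.values()
        if isinstance(n, dict) and "class_type" in n
    }


def missing_node_types(workflow, available):
    """Node types the workflow needs that the server does not provide."""
    needed = workflow_node_types(workflow) - FRONTEND_ONLY
    return sorted(needed - set(available))


def fetch_available_node_types(base_url="http://127.0.0.1:8188"):
    """Ask a running ComfyUI server which node classes it has (GET /object_info)."""
    with urllib.request.urlopen(f"{base_url}/object_info") as resp:
        return set(json.load(resp))


# Example, with a running server and a downloaded workflow file:
# with open("workflow.json", encoding="utf-8") as f:
#     print(missing_node_types(json.load(f), fetch_available_node_types()))
```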

Required Model Downloads

You'll need several model files for complete functionality:

- UMT5 text encoder: download it and place it in ComfyUI/models/text_encoders

- Open CLIP vision encoder: download it and place it in ComfyUI/models/clip

- VAE model: create a wan_video folder inside ComfyUI/models/vae and place the file there

- Main Wan video model: create a wan_video folder inside ComfyUI/models/diffusion_models and place the 17GB model file there
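A minimal sketch of that folder setup in Python, assuming the layout listed above relative to your ComfyUI root folder. The check only looks for any .safetensors or .pth file in each folder, so it cannot tell whether it is the right file.

```python
from pathlib import Path

# Layout from the list above, relative to the ComfyUI root folder.
MODEL_DIRS = {
    "UMT5 text encoder": Path("models/text_encoders"),
    "Open CLIP vision encoder": Path("models/clip"),
    "VAE": Path("models/vae/wan_video"),
    "Wan video model": Path("models/diffusion_models/wan_video"),
}
MODEL_SUFFIXES = {".safetensors", ".pth"}


def prepare_model_dirs(comfy_root):
    """Create any missing model folders and return their full paths."""
    created = []
    for rel in MODEL_DIRS.values():
        target = Path(comfy_root) / rel
        target.mkdir(parents=True, exist_ok=True)
        created.append(target)
    return created


def report_missing(comfy_root):
    """List components whose folder holds no .safetensors or .pth file yet."""
    missing = []
    for label, rel in MODEL_DIRS.items():
        folder = Path(comfy_root) / rel
        has_file = folder.is_dir() and any(
            p.is_file() and p.suffix in MODEL_SUFFIXES for p in folder.iterdir()
        )
        if not has_file:
            missing.append(label)
    return missing


# Example: prepare_model_dirs("ComfyUI"); print(report_missing("ComfyUI"))
```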

Some file names may have changed slightly since the workflow was created. If a loader node cannot find its file, select the matching file in that node's settings.
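When a loader node cannot find the file name saved in the workflow, the closest file on disk is usually the one you want. This sketch uses Python's difflib to suggest candidates with a case-insensitive comparison; 0.6 is difflib's default similarity cutoff, and any file names you pass in are your own.

```python
import difflib


def suggest_model_file(expected, available, cutoff=0.6):
    """Suggest on-disk file names that closely match the one a workflow expects.

    Comparison is case-insensitive; 0.6 is difflib's default similarity cutoff.
    """
    if expected in available:
        return [expected]
    by_lower = {name.lower(): name for name in available}
    matches = difflib.get_close_matches(
        expected.lower(), list(by_lower), n=3, cutoff=cutoff
    )
    return [by_lower[m] for m in matches]
```

An empty result means nothing on disk is similar enough, which usually points to a missing download rather than a renamed file.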

Generating Your First Video

Once all components are installed, load your input image into the image loader node at the bottom left of the workflow. The workflow automatically resizes the image and runs it through the Wan video pipeline, producing video at 16 FPS.
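The arithmetic behind frame counts and resizing can be sketched as follows. These are assumptions rather than values read from the workflow: frame counts of the form 4n + 1 (81 frames is a common default), output sides rounded to multiples of 16, and 832x480 as the 480p target area. Check the values in your workflow's nodes.

```python
import math

FPS = 16  # Wan 2.1 output frame rate, as described above


def frames_for_duration(seconds, fps=FPS):
    """Smallest frame count of the assumed form 4n + 1 that covers `seconds`."""
    needed = math.ceil(seconds * fps)
    n = max(0, math.ceil((needed - 1) / 4))
    return 4 * n + 1


def duration_for_frames(frames, fps=FPS):
    """Clip length in seconds for a given frame count."""
    return frames / fps


def fit_resolution(width, height, target_area=832 * 480, multiple=16):
    """Scale an image to roughly `target_area` pixels, keeping its aspect ratio,
    with both sides rounded to a multiple of `multiple` (assumed 16)."""
    scale = math.sqrt(target_area / (width * height))
    new_w = max(multiple, round(width * scale / multiple) * multiple)
    new_h = max(multiple, round(height * scale / multiple) * multiple)
    return new_w, new_h
```

Under these assumptions a five-second clip needs 81 frames (81 / 16 = 5.0625 seconds), and a 1920x1080 photo would be scaled to 848x480.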

Enter your text prompt describing the desired action or transformation. For example, "person spins around" for a 360-degree rotation or "man makes silly face" for facial expressions.

Click Queue to begin processing. Generation time varies widely with your hardware, from several minutes on a fast GPU to much longer on slower systems.
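Queueing can also be scripted. ComfyUI's server accepts a POST to /prompt whose body wraps a workflow exported in API format, a different JSON layout from the file you dragged in. This sketch assumes the default address http://127.0.0.1:8188 (the instance inside Promptus may listen elsewhere), and the file name in the example comment is hypothetical.

```python
import json
import urllib.request
import uuid


def build_prompt_payload(api_workflow, client_id=None):
    """Wrap an API-format workflow in the JSON body that POST /prompt expects."""
    return {"prompt": api_workflow, "client_id": client_id or uuid.uuid4().hex}


def queue_workflow(api_workflow, base_url="http://127.0.0.1:8188"):
    """Submit a workflow to a running ComfyUI server and return its JSON reply."""
    body = json.dumps(build_prompt_payload(api_workflow)).encode("utf-8")
    request = urllib.request.Request(
        f"{base_url}/prompt",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request) as resp:
        # On success the reply includes a "prompt_id" for the queued job.
        return json.load(resp)


# Example (hypothetical file name, exported in API format):
# with open("wan_i2v_api.json", encoding="utf-8") as f:
#     print(queue_workflow(json.load(f)))
```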

The VAE decoder stage produces the final frames, and the completed video appears directly in the ComfyUI interface as an MP4 file.
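To locate the file on disk, a small helper can pick the most recent MP4 in ComfyUI's output folder. The folder path is an assumption: ComfyUI saves outputs to its output directory by default, but your video node may be configured differently.

```python
from pathlib import Path


def newest_video(output_dir, pattern="*.mp4"):
    """Return the most recently modified file matching `pattern` under
    `output_dir` (searched recursively), or None if there is none."""
    files = [p for p in Path(output_dir).rglob(pattern) if p.is_file()]
    return max(files, key=lambda p: p.stat().st_mtime, default=None)


# Example (assumed default output folder): print(newest_video("ComfyUI/output"))
```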

Performance and Quality Considerations

The 480p model provides good results for most users, while the 720p model offers superior quality at the cost of longer processing times and higher system requirements. The compressed community models strike an excellent balance between quality and performance for home users.
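A back-of-the-envelope sketch of why 720p costs so much more: count the tokens the video transformer processes. It assumes Wan 2.1's VAE compresses time by 4 and each spatial side by 8, that the transformer uses 1x2x2 patches, and that 480p and 720p mean 832x480 and 1280x720. Treat these as assumptions, and the result as a rough scaling estimate rather than a timing prediction.

```python
def latent_tokens(width, height, frames, vae_stride=(4, 8, 8), patch=(1, 2, 2)):
    """Rough count of transformer tokens for one clip.

    Assumes the VAE compresses time by 4 and each spatial side by 8, keeping
    the first frame (hence (frames - 1) // 4 + 1), and 1x2x2 patches.
    """
    latent_t = (frames - 1) // vae_stride[0] + 1
    latent_h = height // vae_stride[1]
    latent_w = width // vae_stride[2]
    return (latent_t // patch[0]) * (latent_h // patch[1]) * (latent_w // patch[2])


low = latent_tokens(832, 480, 81)    # 480p clip
high = latent_tokens(1280, 720, 81)  # 720p clip
ratio = high / low
print(f"720p uses {ratio:.1f}x the tokens; attention cost grows roughly {ratio ** 2:.1f}x")
```

With 81 frames this gives 32,760 tokens at 480p and 75,600 at 720p. Because self-attention cost grows roughly with the square of the token count, 720p needs about 2.3x the tokens and about 5.3x the attention work.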

Processing times depend heavily on your GPU and overall system specifications. More powerful hardware significantly reduces generation time, while older systems can take much longer to produce the same result.

Tips for Better Results

- Use clear, descriptive prompts that specify the desired action or movement

- Start with the 480p model to test your setup before moving to higher resolutions

- Ensure your input images are well-lit and clear for better video generation

- Experiment with different prompt styles to achieve your desired visual effects

The Future of AI Video Generation

Wan 2.1 represents a significant advance in accessible AI video generation. Free availability, community optimization, and user-friendly interfaces like ComfyUI put high-quality video generation within reach of anyone with a capable PC.

As the technology evolves, we can expect better performance, quality, and ease of use, and active community development continues to deliver improvements and optimizations for home users.

This democratization of AI video generation opens new possibilities for content creators, educators, and anyone interested in exploring the cutting edge of artificial intelligence applications.

Level up your team's AI usage: collaborate with Promptus and become an AI workflow creator.
