In this comprehensive tutorial, we'll build a complete ComfyUI Wan 2.1 image-to-video workflow from scratch. This guide focuses on the native ComfyUI implementation, which works with the classic KSampler node and makes video generation accessible to creators at any level.

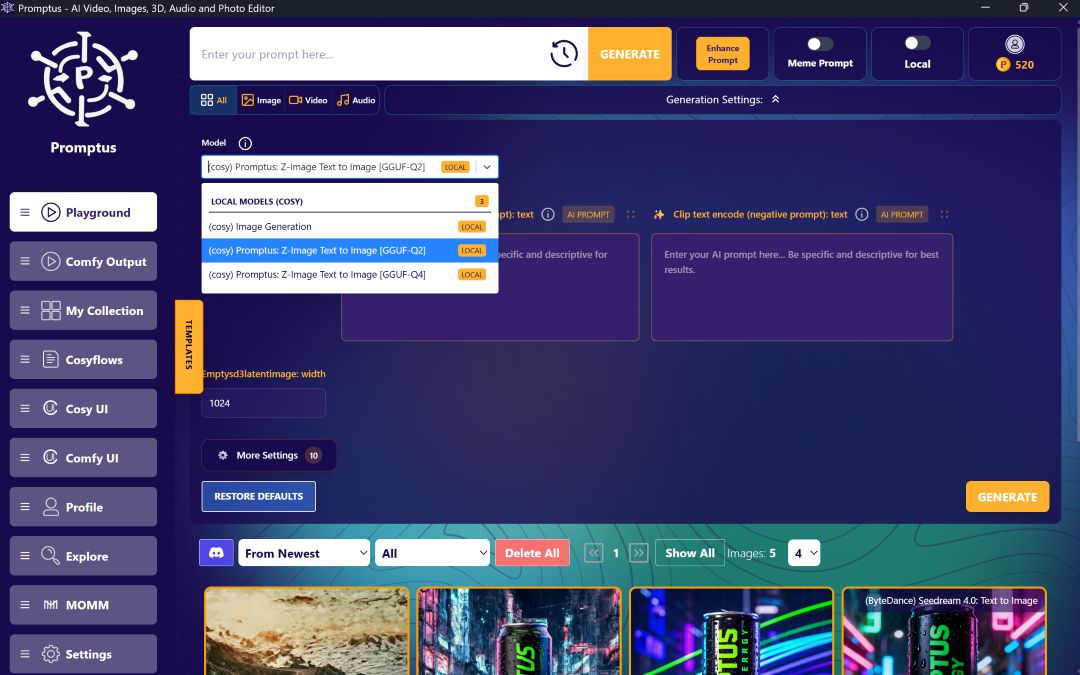

Prerequisites & Set-up Requirements

Before starting with Wan 2.1, you must update ComfyUI to the latest version. Without this update, the Wan 2.1 nodes won't be available regardless of which workflow you're trying to use.

The workflow requires several specific models that need to be placed in the correct directories within your ComfyUI installation:

Required Models:

- Wan 2.1 Image to Video model (the 14B FP8 e4m3fn version is recommended to keep memory use manageable)

- UMT5-XXL text encoder (umt5_xxl; similar to the T5-XXL encoder used with Flux, but multilingual)

- Wan 2.1 VAE

- CLIP Vision H model (clip_vision_h)

Directory Structure:

- Main models go in: ComfyUI/models/diffusion_models/

- Text encoders go in: ComfyUI/models/text_encoders/

- VAE files go in: ComfyUI/models/vae/

- Clip vision models go in: ComfyUI/models/clip_vision/
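If a loader node shows nothing in its dropdown, the file is usually sitting in the wrong folder. The short Python sketch below checks the four directories listed above. The file names are example names (an assumption, not a requirement of the workflow); replace them with the exact files you downloaded.

```python
from pathlib import Path

# Example file names (assumptions): replace them with the files you actually downloaded.
REQUIRED_MODELS = {
    "diffusion_models": "wan2.1_i2v_480p_14B_fp8_e4m3fn.safetensors",
    "text_encoders": "umt5_xxl_fp8_e4m3fn_scaled.safetensors",
    "vae": "wan_2.1_vae.safetensors",
    "clip_vision": "clip_vision_h.safetensors",
}


def missing_models(comfy_root: str) -> list[str]:
    """Return each expected model path (relative to models/) that is not present."""
    models_dir = Path(comfy_root) / "models"
    return [
        f"{subdir}/{filename}"
        for subdir, filename in REQUIRED_MODELS.items()
        if not (models_dir / subdir / filename).is_file()
    ]


if __name__ == "__main__":
    # Point this at your ComfyUI installation folder.
    for path in missing_models("ComfyUI"):
        print("missing:", path)
```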

Building the Core Workflow

Start with the basic foundation by loading your main model. The workflow begins similarly to traditional image generation setups but includes specific video preprocessing components.

Basic Node Setup:

1. Load the Wan 2.1 main model

2. Add positive and negative prompt inputs (colored green and red respectively)

3. Add a Load VAE node and connect it to the VAE Decode node

4. Add a Load CLIP node using the UMT5-XXL text encoder

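The key difference from standard workflows is that the Empty Latent Image node is replaced by the "Image to Video" preprocessor node (named WanImageToVideo in ComfyUI). This node collects the positive and negative prompts, the VAE, the CLIP Vision output, and your start image from the left side of the workflow, then passes updated conditioning and a video latent sized to your width, height, and frame count on to the KSampler.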
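To see why a dedicated node is needed here, compare the shapes involved: an image latent has no frame axis, while a video latent does. The sketch below assumes the Wan 2.1 VAE uses 16 latent channels with 8x spatial and 4x temporal compression, keeping the first frame as its own latent frame; treat those figures as assumptions about ComfyUI's Wan implementation rather than a specification.

```python
def wan_video_latent_shape(width: int, height: int, length: int,
                           batch_size: int = 1) -> tuple[int, int, int, int, int]:
    """(batch, channels, latent_frames, latent_height, latent_width) for a Wan 2.1 video.

    Assumes 16 latent channels, 8x spatial downsampling, and 4x temporal
    downsampling after the first frame, i.e. latent_frames = (length - 1) // 4 + 1.
    """
    latent_frames = (length - 1) // 4 + 1
    return (batch_size, 16, latent_frames, height // 8, width // 8)


# A 65-frame, 832x480 clip becomes 17 latent frames of 60x104.
print(wan_video_latent_shape(832, 480, 65))  # (1, 16, 17, 60, 104)
```

Because the frame count changes the latent's shape, the video node builds the latent itself from the width, height, and length you set.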

Video-Specific Components:

- Image to Video preprocessor node

- Clip Vision Encode node

- Clip Vision Loader (using clip vision H)

- Image input for your starting frame

- Video Combine node for final output (part of the ComfyUI-VideoHelperSuite custom node pack)
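Wired together, these nodes form a graph like the one below, written as a Python dict in ComfyUI's API (prompt) format. This is a sketch: the class and input names should match recent ComfyUI builds but may differ in yours (the `crop` input on CLIPVisionEncode, for example, only exists in newer versions), and every file name, prompt, and sampler value is a placeholder. Video Combine is left out because it is a custom node; it would take the frames from the VAEDecode node.

```python
import json

# API-format graph: node id -> {"class_type", "inputs"}.
# A link is [source_node_id, output_index]; everything else is a literal setting.
# File names, prompts, and numbers below are placeholders.
workflow = {
    "1": {"class_type": "UNETLoader",
          "inputs": {"unet_name": "wan2.1_i2v_480p_14B_fp8_e4m3fn.safetensors",
                     "weight_dtype": "default"}},
    "2": {"class_type": "CLIPLoader",
          "inputs": {"clip_name": "umt5_xxl_fp8_e4m3fn_scaled.safetensors", "type": "wan"}},
    "3": {"class_type": "CLIPTextEncode",  # positive prompt (green)
          "inputs": {"text": "Static camera shot. A jellyfish pulsates slowly.",
                     "clip": ["2", 0]}},
    "4": {"class_type": "CLIPTextEncode",  # negative prompt (red)
          "inputs": {"text": "blurry, distorted, camera shake", "clip": ["2", 0]}},
    "5": {"class_type": "VAELoader", "inputs": {"vae_name": "wan_2.1_vae.safetensors"}},
    "6": {"class_type": "CLIPVisionLoader", "inputs": {"clip_name": "clip_vision_h.safetensors"}},
    "7": {"class_type": "LoadImage", "inputs": {"image": "start_frame.png"}},
    "8": {"class_type": "CLIPVisionEncode",
          "inputs": {"clip_vision": ["6", 0], "image": ["7", 0], "crop": "none"}},
    "9": {"class_type": "WanImageToVideo",  # outputs: 0 positive, 1 negative, 2 latent
          "inputs": {"positive": ["3", 0], "negative": ["4", 0], "vae": ["5", 0],
                     "clip_vision_output": ["8", 0], "start_image": ["7", 0],
                     "width": 832, "height": 480, "length": 65, "batch_size": 1}},
    "10": {"class_type": "KSampler",
           "inputs": {"model": ["1", 0], "positive": ["9", 0], "negative": ["9", 1],
                      "latent_image": ["9", 2], "seed": 42, "steps": 20, "cfg": 6.0,
                      "sampler_name": "ddim", "scheduler": "normal", "denoise": 1.0}},
    "11": {"class_type": "VAEDecode",  # decoded frames; feed these to Video Combine
           "inputs": {"samples": ["10", 0], "vae": ["5", 0]}},
}


def dangling_links(graph: dict) -> list[tuple[str, str]]:
    """Return (node_id, input_name) pairs whose link points at a node that doesn't exist."""
    return [
        (node_id, name)
        for node_id, node in graph.items()
        for name, value in node["inputs"].items()
        if isinstance(value, list) and value[0] not in graph
    ]


assert dangling_links(workflow) == []
payload = json.dumps({"prompt": workflow})  # body for ComfyUI's POST /prompt endpoint
```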

Understanding the New Text Encoder

The UMT5-XXL text encoder is built on a large multilingual language model, so you can write prompts as natural sentences rather than comma-separated tags. It supports multiple languages, including Chinese, and this workflow also brings back negative prompting, which some other recent models don't support.

You can describe your video in conversational language while still keeping precise control over the generation process.

Configuring Video Parameters

The workflow includes several important video-specific settings:

Frame Settings:

- Frame count: 65 frames by default in this workflow

- Frame rate: typically 16 FPS (adjustable)

- Resolution: match your input image dimensions when possible

- Batch size: number of video variations to generate
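Two of these settings have constraints worth enforcing before you queue a run. The sketch below assumes the video node accepts frame counts of the form 4n + 1 and widths and heights in multiples of 16; check the widget steps in your ComfyUI build. The 832-pixel cap on the longer side is an assumed target for the 480p model, not a rule from this guide.

```python
def snap_length(frames: int) -> int:
    """Round a frame count down to the nearest value of the form 4n + 1 (minimum 1)."""
    return max(1, (frames - 1) // 4 * 4 + 1)


def fit_resolution(img_w: int, img_h: int, max_side: int = 832,
                   multiple: int = 16) -> tuple[int, int]:
    """Match the input image's aspect ratio, cap the longer side at max_side,
    then round each side down to a multiple of `multiple`."""
    longest = max(img_w, img_h)
    if longest > max_side:
        # Integer scaling keeps the math exact and the aspect ratio (nearly) intact.
        img_w, img_h = img_w * max_side // longest, img_h * max_side // longest
    width = max(multiple, img_w // multiple * multiple)
    height = max(multiple, img_h // multiple * multiple)
    return width, height


print(snap_length(70))              # 69
print(fit_resolution(1920, 1080))   # (832, 464)
```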

Sampler Configuration:

- DDIM sampler recommended for video (it produces less stiff motion than Euler)

- Standard sampling steps apply

- CFG scale works similarly to image generation

Prompting Techniques for Video Generation

Effective video prompting differs from static image prompting. Focus on describing camera movement, subject behavior, and environmental factors.

Camera Control Examples:

- "Static camera shot" - minimal camera movement

- "Camera pans to close-up" - directed camera movement

- "Tracking shot" - follows subject movement

- "Crash zoom" - rapid zoom to specific element

Subject Direction:

- Describe what you want the subject to do

- Mention environmental interactions

- Specify if elements should remain still

- Detail any repetitive motions like "pulsating" or "breathing"

Environmental Details:

- Describe atmospheric effects like bubbles, smoke, or particles

- Mention lighting changes or effects

- Include background activity or movement
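Since the text encoder reads full sentences, one way to apply these three categories consistently is to assemble them into short sentences. The class below is a hypothetical helper, not part of ComfyUI; its output is plain text you would paste into the positive prompt node.

```python
from dataclasses import dataclass, field


@dataclass
class VideoPrompt:
    """Hypothetical helper that joins camera, subject, and environment notes."""
    camera: str                                            # e.g. "Static camera shot"
    subject: str                                           # what the subject does
    environment: list[str] = field(default_factory=list)  # atmosphere, lighting, background

    def render(self) -> str:
        # Turn each non-empty part into one sentence ending in a single period.
        parts = [self.camera, self.subject, *self.environment]
        return " ".join(p.strip().rstrip(".") + "." for p in parts if p.strip())


prompt = VideoPrompt(
    camera="Static camera shot",
    subject="A jellyfish pulsates slowly while its tentacles drift",
    environment=["Small bubbles rise through the water",
                 "Soft blue light filters down from above"],
)
print(prompt.render())
```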

Optimizing Performance and Quality

The FP8 (e4m3fn) version of the 14B model provides a good balance between quality and system requirements. FP8 stores roughly one byte per parameter, so the 14B model's weights alone take about 14 GB: still demanding, but roughly half the size of a 16-bit version while maintaining good output quality.
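Checkpoint size follows directly from parameter count and precision. The rough rule sketched below is parameters times bytes per parameter; it ignores file metadata and the run-time memory for activations and for the text encoder, VAE, and CLIP Vision model, so real requirements are higher.

```python
# Bytes used to store one weight at each precision.
BYTES_PER_PARAM = {"fp32": 4, "fp16": 2, "bf16": 2, "fp8": 1}


def weights_gb(params_billion: float, dtype: str) -> float:
    """Approximate raw weight size in GB (1 GB = 1e9 bytes).

    Billions of parameters x bytes per parameter = gigabytes.
    """
    return float(params_billion) * BYTES_PER_PARAM[dtype]


for dtype in ("fp16", "fp8"):
    print(f"14B at {dtype}: ~{weights_gb(14, dtype):.0f} GB")
# 14B at fp16: ~28 GB
# 14B at fp8: ~14 GB
```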

Performance Tips:

- Start with lower frame counts for testing

- Use the FP8 quantized versions for better performance

- Match input image resolution to avoid unnecessary processing

- Consider batch processing for multiple variations
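If you drive ComfyUI from a script, the first and last tips combine naturally: queue a few short test runs with different seeds before committing to a full-length render. The sketch below assumes a workflow exported in API format and a local server at ComfyUI's default address (http://127.0.0.1:8188), whose /prompt endpoint accepts a JSON body with a "prompt" key. The node ids and the file name `wan_i2v_api.json` in the usage comment are hypothetical; use the ids from your own export.

```python
import copy
import json
import urllib.request


def make_test_variants(workflow: dict, sampler_id: str, video_node_id: str,
                       seeds: list[int], test_length: int = 33) -> list[dict]:
    """One copy of the API-format workflow per seed, each with a short clip length.

    sampler_id and video_node_id are the ids of the KSampler and image-to-video
    nodes in your exported workflow (they differ between exports).
    """
    variants = []
    for seed in seeds:
        wf = copy.deepcopy(workflow)  # leave the original workflow untouched
        wf[sampler_id]["inputs"]["seed"] = seed
        wf[video_node_id]["inputs"]["length"] = test_length  # 33 frames ~ 2 s at 16 FPS
        variants.append(wf)
    return variants


def queue_prompt(workflow: dict, server: str = "http://127.0.0.1:8188") -> dict:
    """POST one workflow to a running ComfyUI server and return its JSON reply."""
    body = json.dumps({"prompt": workflow}).encode("utf-8")
    request = urllib.request.Request(f"{server}/prompt", data=body,
                                     headers={"Content-Type": "application/json"})
    with urllib.request.urlopen(request) as response:
        return json.loads(response.read())


# Usage (requires a running server and an exported workflow):
# base = json.load(open("wan_i2v_api.json"))
# for wf in make_test_variants(base, sampler_id="10", video_node_id="9", seeds=[1, 2, 3]):
#     print(queue_prompt(wf))
```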

Quality Considerations:

- Videos longer than 6-8 seconds may experience morphing issues

- Keep important subjects within frame boundaries

- Use consistent lighting in source images

- Remember that clip duration is frame count divided by frame rate: 65 frames at 16 FPS is just over 4 seconds
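The duration arithmetic is simple enough to script. This sketch converts frames to seconds at a given frame rate and, going the other way, rounds up to a 4n + 1 frame count (an assumption about the video node's length step). The 6-second warning threshold takes the low end of the 6-8 second range mentioned above.

```python
import math

MORPHING_RISK_SECONDS = 6.0  # low end of the 6-8 s range given above


def clip_seconds(frames: int, fps: float = 16.0) -> float:
    """Duration of a clip in seconds."""
    return frames / fps


def frames_for(seconds: float, fps: float = 16.0) -> int:
    """Smallest 4n + 1 frame count that covers `seconds` at `fps`."""
    needed = math.ceil(seconds * fps)
    return max(1, math.ceil((needed - 1) / 4) * 4 + 1)


for target in (4, 5, 8):
    n = frames_for(target)
    note = "  (morphing risk)" if clip_seconds(n) > MORPHING_RISK_SECONDS else ""
    print(f"{target} s -> {n} frames = {clip_seconds(n):.2f} s{note}")
```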

Troubleshooting Common Issues

When elements don't behave as expected, refine your prompts with more specific language. If the camera moves too much despite "static" instructions, add "remaining still" or similar stabilizing phrases.

For missing environmental effects like bubbles or particles, double-check your spelling and try alternative descriptive terms. The model responds well to detailed descriptions of what should be happening in the scene.

Advanced Workflow Customization

The basic workflow can be extended with additional preprocessing nodes, upscaling components, or multiple video outputs. LoRA integration is possible but works better when applied to source images rather than directly in the video generation process.

Consider creating keyframe sequences outside the video generator and using this workflow to animate between them for longer-form content.
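When planning that kind of longer piece, a small helper can split a target duration across your keyframes and refuse clips long enough to risk morphing. It is a hypothetical planning aid: each segment records which keyframe to load as the start image for one run of this workflow and how many frames to request. The 5-second cap and the 4n + 1 rounding are assumptions you can change.

```python
import math
from dataclasses import dataclass


@dataclass
class Segment:
    start_image: str  # keyframe to load as the start image for this clip
    length: int       # frames to request (4n + 1)


def plan_segments(keyframes: list[str], total_seconds: float,
                  fps: float = 16.0, max_seconds: float = 5.0) -> list[Segment]:
    """Split total_seconds evenly across keyframes, one image-to-video run each."""
    if not keyframes:
        raise ValueError("need at least one keyframe")
    per_clip = total_seconds / len(keyframes)
    if per_clip > max_seconds:
        raise ValueError(f"{per_clip:.1f} s per clip exceeds {max_seconds} s; add keyframes")
    needed = math.ceil(per_clip * fps)
    length = max(1, math.ceil((needed - 1) / 4) * 4 + 1)  # round up to 4n + 1
    return [Segment(image, length) for image in keyframes]


for seg in plan_segments(["key_01.png", "key_02.png", "key_03.png"], total_seconds=12):
    print(seg)
```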

Conclusion

ComfyUI Wan 2.1 represents a significant advancement in open-source video generation, offering capabilities that rival closed-source alternatives. The native integration with ComfyUI's node system makes it accessible while providing the flexibility to customize workflows for specific needs.

The combination of natural language prompting, negative prompt support, and the familiar KSampler interface creates a powerful yet approachable video generation system. As open-source video generation continues to evolve rapidly, mastering these foundational techniques positions creators to take advantage of future developments.

Level up your team's AI usage: collaborate with Promptus. Be a creator at https://www.promptus.ai
